The phrase "long-termism" is occupying an increasing share of EA community "branding". Examples include the Long-Term Future Fund, the FTX Future Fund ("we support ambitious projects to improve humanity's long-term prospects"), and the impending launch of What We Owe The Future ("making the case for long-termism").

Will MacAskill describes long-termism as:

I think this is an interesting philosophy, but I worry that in practical and branding situations it rarely adds value, and might subtract it.

In The Very Short Run, We're All Dead

AI alignment is a central example of a supposedly long-termist cause.

But Ajeya Cotra's Biological Anchors report estimates a 10% chance of transformative AI by 2031, and a 50% chance by 2052. Others (e.g. Eliezer Yudkowsky) think it might happen even sooner.

Let me rephrase this in a deliberately inflammatory way: if you're under ~50, unaligned AI might kill you and everyone you know. Not your great-great-(...)-great-grandchildren in the year 30,000 AD. Not even your children. You and everyone you know. As a pitch to get people to care about something, this is a pretty strong one.

But right now, a lot of EA discussion about this goes through an argument that starts with "did you know you might want to assign your descendants in the year 30,000 AD exactly equal moral value to yourself? Did you know that maybe you should care about their problems exactly as much as you care about global warming and other problems happening today?"

Regardless of whether these statements are true, or whether you could eventually convince someone of them, they're not the most efficient way to make people concerned about something which will also, in the short term, kill them and everyone they know.

The same argument applies to other long-termist priorities, like biosecurity and nuclear weapons. Well-known ideas like "the hinge of history", "the most important century" and "the precipice" all point to the idea that existential risk is concentrated in the relatively near future - probably before 2100.

The average biosecurity project being funded by the Long-Term Future Fund or FTX Future Fund is aimed at preventing pandemics in the next 10 to 30 years. The average nuclear containment project is aimed at preventing nuclear wars in the next 10 to 30 years. One reason all of these projects are good is that they will prevent humanity from being wiped out, leading to a flourishing long-term future. But another reason they're good is that if there's a pandemic or nuclear war 10 to 30 years from now, it might kill you and everyone you know.

Does Long-Termism Ever Come Up With Different Conclusions Than Thoughtful Short-Termism?

I think yes, but rarely, and in ways that seldom affect real practice.

Long-termism might be more willing to fund Progress Studies type projects that increase the rate of GDP growth by .01% per year in a way that compounds over many centuries. "Value change" type work - gradually shifting civilizational values to those more in line with human flourishing - might fall into this category too.
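To see why a boost that small only matters on very long horizons, here is a minimal compounding sketch. It assumes the 0.01% figure means an extra factor of 1.0001 on output each year relative to some baseline growth path (the post doesn't specify the baseline); `relative_gdp` is a hypothetical helper, not anything from the post.

```python
# Assumption: the boost multiplies output by an extra (1 + 0.0001) each year
# relative to the baseline path, whatever the baseline growth rate is.
EXTRA_GROWTH = 0.0001


def relative_gdp(years: int, extra_growth: float = EXTRA_GROWTH) -> float:
    """Ratio of boosted GDP to baseline GDP after `years` of compounding."""
    return (1 + extra_growth) ** years


# Over a decade or a century the gain is tiny; over a millennium it adds up.
for years in (10, 100, 1000):
    print(f"after {years:>4} years: {relative_gdp(years) - 1:.2%} larger than baseline")
```

Under this reading the gain is roughly 1% after a century and roughly 10% after a thousand years, which is why this kind of project looks much better to someone weighting the far future.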

In practice I rarely see long-termists working on these except when they have shorter-term effects. I think there's a sense that in the next 100 years, we'll either get a negative technological singularity which will end civilization, or a positive technological singularity which will solve all of our problems - or at least profoundly change the way we think about things like "GDP growth". Most long-termists I see are trying to shape the progress and values landscape up until that singularity, in the hopes of affecting which way the singularity goes - which puts them on the same page as thoughtful short-termists planning for the next 100 years.

Long-termists might also rate x-risks differently from suffering alleviation. For example, suppose you could choose between saving 1 billion people from poverty (with certainty), or preventing a nuclear war that killed all 10 billion people (with probability 1%), and we assume that poverty is 10% as bad as death. A short-termist might be indifferent between these two causes, but a long-termist would consider the war prevention much more important, since they're thinking of all the future generations who would never be born if humanity was wiped out.
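The arithmetic behind that indifference can be checked with a short sketch. It only encodes the stipulations in the paragraph (1 billion people, 10 billion people, a 1% chance, poverty weighted at 10% as bad as death). The `future_people` parameter is a hypothetical placeholder, since the post puts no number on future generations, and the value 1e12 used below is purely illustrative.

```python
import math

# Stipulated in the post: poverty is 10% as bad as death.
POVERTY_WEIGHT = 0.1


def poverty_option(people_lifted: float) -> float:
    """Death-equivalents averted by lifting people out of poverty with certainty."""
    return people_lifted * POVERTY_WEIGHT


def war_option(people_killed: float, probability: float, future_people: float = 0.0) -> float:
    """Expected death-equivalents averted by preventing an extinction-level war.

    future_people is a hypothetical stand-in for the never-born generations a
    long-termist would also count; the post gives no figure, so it defaults to 0.
    """
    return (people_killed + future_people) * probability


poverty = poverty_option(1_000_000_000)  # 1 billion people, with certainty
war = war_option(10_000_000_000, 0.01)   # 10 billion people, 1% chance

# A short-termist counts only people alive now, so the two options tie.
indifferent = math.isclose(poverty, war)

# A long-termist adds future people; any positive number tips the balance
# toward preventing the war (1e12 here is an arbitrary illustration).
longtermist_prefers_war = war_option(10_000_000_000, 0.01, future_people=1e12) > poverty

print(f"poverty option: {poverty:,.0f} death-equivalents")
print(f"war option:     {war:,.0f} death-equivalents")
print(f"short-termist indifferent: {indifferent}")
print(f"long-termist prefers war prevention: {longtermist_prefers_war}")
```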

In practice, I think there's almost never an option to save 1 billion people from poverty with certainty. When I said that there was, that was a hack I had to put in there to make the math work out so that the short-termist would come to a different conclusion from the long-termist. A 1-in-a-million chance of preventing apocalypse is worth 7,000 lives, which takes $30 million with GiveWell-style charities. But I don't think long-termists are actually asking for $30 million to make the apocalypse 0.0001% less likely - both because we can't reliably calculate numbers that low, and because if you had $30 million you could probably do much better than 0.0001%. So I'm skeptical that problems like this are likely to come up in real life.
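The figures in that paragraph can be reproduced with exact fractions. The world population of about 7 billion is an assumption, chosen because it is what the "7,000 lives" figure implies; the cost per life below is simply derived from the $30 million figure and is not a GiveWell estimate.

```python
from fractions import Fraction

# Assumption: a world population of about 7 billion, which is what the
# "7,000 lives" figure implies; the post does not state it directly.
POPULATION = 7_000_000_000
P_PREVENT = Fraction(1, 1_000_000)  # a 1-in-a-million reduction in extinction risk
BUDGET_USD = 30_000_000             # the GiveWell-style comparison budget from the post

# Expected present lives saved by shaving 1/1,000,000 off the risk.
lives_equivalent = P_PREVENT * POPULATION

# The same probability expressed as a percentage.
percent = P_PREVENT * 100

# Cost per life implied by spending the whole budget on those lives.
implied_cost_per_life = Fraction(BUDGET_USD) / lives_equivalent

print(f"expected lives: {lives_equivalent}")
print(f"as a percentage: {float(percent)}%")
print(f"implied cost per life: ${float(implied_cost_per_life):,.0f}")
```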

When people allocate money to causes other than existential risk, I think it's more often as a sort of moral parliament maneuver, rather than because they calculated it out and the other cause is better in a way that would change if we considered the long-term future.

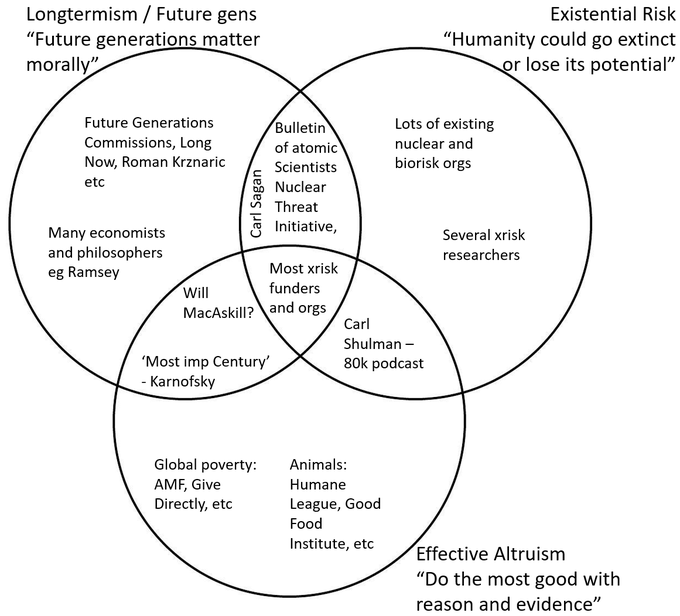

"Long-termism" vs. "existential risk"

Philosophers shouldn't be constrained by PR considerations. If they're actually long-termist, and that's what's motivating them, they should say so.

But when I'm talking to non-philosophers, I prefer an "existential risk" framework to a "long-termism" framework. The existential risk framework immediately identifies a compelling problem (you and everyone you know might die) without asking your listener to accept controversial philosophical assumptions. It forestalls attacks about how it's non-empathetic or politically incorrect not to prioritize various classes of people who are suffering now. And it focuses objections on the areas that are most important to clear up (is there really a high chance we're all going to die soon?) and not on tangential premises (are we sure that we know how our actions will affect the year 30,000 AD?)

I'm interested in hearing whether other people have different reasons for preferring the "long-termism" framework that I'm missing.

Hey Scott - thanks for writing this, and sorry for being so slow to the party on this one!

I think you’ve raised an important question, and it’s certainly something that keeps me up at night. That said, I want to push back on the thrust of the post. Here are some responses and comments! :)

The main view I’m putting forward in this comment is “we should promote a diversity of memes that we believe, see which ones catch on, and mould the ones that are catching on so that they are vibrant and compelling (in ways we endorse).” These memes include both “existential risk” and “longtermism”.

What is longtermism?

The quote of mine you give above comes from Spring 2020. Since then, I’ve distinguished between longtermism and strong longtermism.

My current preferred slogan definitions of each:

In WWOTF, I promote the weaker claim. In recent podcasts, I’ve described it as something like the following (depending on how flowery I’m feeling at the time):

I prefer to promote longtermism rather than strong longtermism. It's a weaker claim and so I have a higher credence in it, and I feel much more robustly confident in it; at the same time, it gets almost all the value because in the actual world strong longtermism recommends the same actions most of the time, on the current margin.

Is existential risk a more compelling intro meme than longtermism?

My main take is: what meme is good for which people is highly dependent on the person and the context (e.g., the best framing to use in a back-and-forth conversation may be different from one in a viral tweet). This favours diversity: having a toolkit of memes that we can use depending on what’s best in context.

I think it’s very hard to reason about which memes to promote, and easy to get it wrong from the armchair, for a bunch of reasons:

Then, at least when we’re comparing (weak) longtermism with existential risk, it’s not obvious which resonates better in general. (If anything, it seems to me that (weak) longtermism does better.) A few reasons:

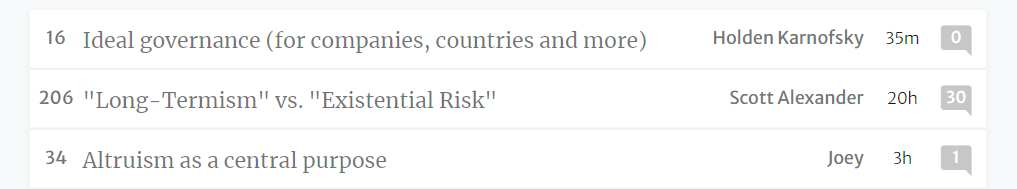

First, message testing from Rethink suggests that longtermism and existential risk have similarly-good reactions from the educated general public, and AI risk doesn’t do great. The three best-performing messages they tested were:

So people actually quite like messages about unspecified, not necessarily high-probability threats to the (albeit nearer-term) future.

As terms to describe risk, “global catastrophic risk” and “long-term risk” did the best, coming out a fair amount better than “existential risk”.

They didn’t test a message about AI risk specifically. The closest related question was how much the government should prepare for different risks (pandemics, nuclear, etc.), and AI came out worst among about 10 (but I don’t think that tells us very much).

Second, most media reception of WWOTF has been pretty positive so far. This is based mainly on early reviews (especially trade reviews), podcast and journalistic interviews, and the recent profiles (although the New Yorker profile was mixed). Though there definitely has been some pushback (especially on Twitter), I think it’s overall been dwarfed by positive articles. And the pushback I have gotten is on the Elon endorsement, on the association between EA and billionaires, and on standard objections to utilitarianism, and less so on the idea of longtermism itself.

Third, anecdotally at least, a lot of people just hate the idea of AI risk (cf. Twitter), thinking of it as a tech bro issue, or doomsday cultism. This has been coming up in the Twitter response to WWOTF, too, even though existential risk from AI takeover is only a small part of the book. And this is important, because I’d think that the median view among people working on x-risk (including me) is that the large majority of the risk comes from AI rather than bio or other sources. So “holy shit, x-risk” is mainly “holy shit, AI risk”.

Do neartermists and longtermists agree on what’s best to do?

Here I want to say: maybe. (I personally don’t think so, but YMMV.) But even if you do believe that, I think that’s a very fragile state of affairs, which could easily change as more money and attention flows into x-risk work, or if our evidence changes, and I don’t want to place a lot of weight on it. (I do strongly believe that global catastrophic risk is enormously important even in the near term, and a sane world would be doing far, far better on it, even if everyone only cared about the next 20 years.)

More generally, I get nervous about any plan that isn’t about promoting what we fundamentally believe or care about (or a weaker version of what we fundamentally believe or care about, which is “on track” to the things we do fundamentally believe or care about).

What I mean by “promoting what we fundamentally believe or care about”:

Examples of people promoting means rather than goals, and this going wrong:

Examples of how this could go wrong by promoting “holy shit x-risk”:

So, overall my take is:

Thanks for explaining, really interesting and glad so much careful thinking is going into communication issues!

FWIW I find the "meme" framing you use here off-putting. The framing feels kinda uncooperative, as if we're trying to trick people into believing something instead of making arguments to convince people who want to understand the merits of an idea. I associate memes with ideas that are selected for being easy and fun to spread, that likely affirm our biases, and that spread mostly without the constraint of whether the ideas are convincing upon refl...