Summary

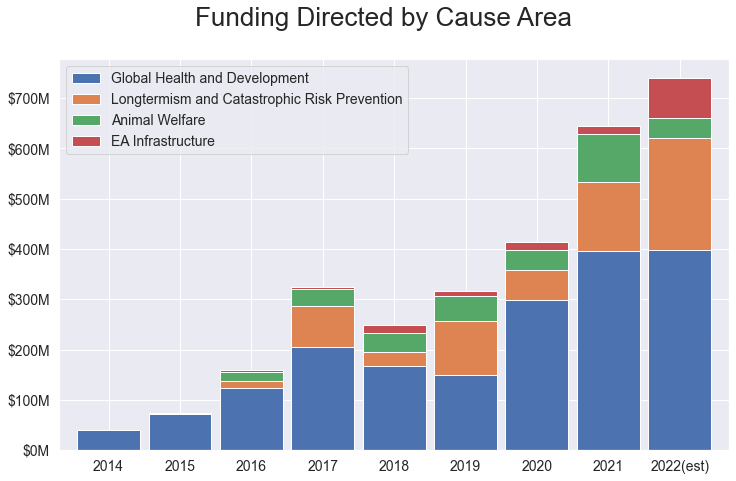

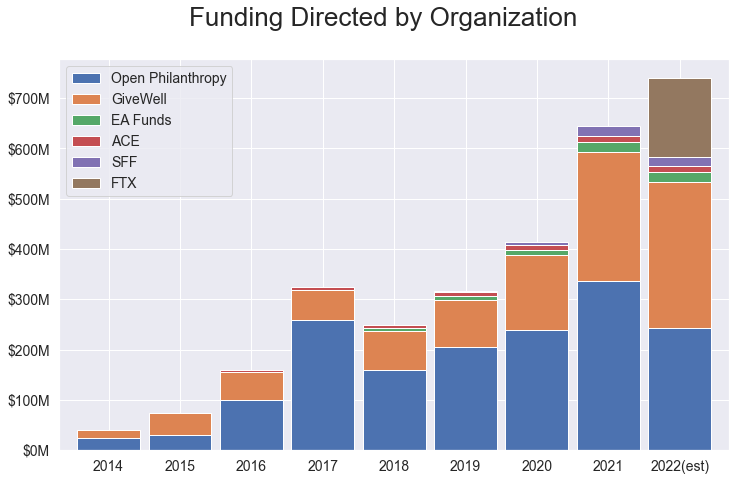

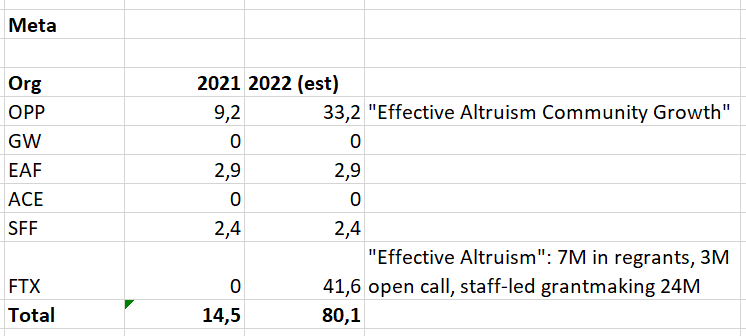

I have consolidated publicly available grants data from EA organizations into a spreadsheet, which I intend to update periodically[1]. The totals are shown in the charts.

(edit: swapped color palette to make graphs easier to read)

Observations

- $2.6Bn in grants on record since 2012, about 63% of which went to Global Health.

- With the addition of FTX and impressive fundraising by GiveWell, Animal Welfare looks even more neglected in relative terms: effective animal charities will likely receive roughly 5% of EA funding in 2022, the smallest share since 2015 by a wide margin.

Notes on the data

NB: This is just one observer's tally of public data. Sources are cited in the spreadsheet; I am happy to correct any errors as they are pointed out.

GiveWell

- GiveWell uses a 'metrics year' starting 1 Feb (all other sources were tabulated by calendar year).

- GiveWell started breaking out 'funds directed' vs 'funds raised' for metrics year 2021. Previous years refer to 'money moved', which is close but not exactly the same.

- I have excluded funds directed through GiveWell by Open Phil and EA Funds, as those are already included in this data set.
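The two GiveWell adjustments above (the shifted metrics year and the exclusion of money already counted elsewhere) can be sketched in Python. This is a minimal illustration under stated assumptions, not the spreadsheet's actual method: it assumes a metrics year is labeled by the calendar year in which it starts, which matches metrics year 2021 running through January 2022. The helper names and the dollar figures in the example are hypothetical.

```python
from datetime import date

# Funders whose GiveWell-directed money is already counted via their own grant data.
ALREADY_COUNTED = {"Open Phil", "EA Funds"}


def givewell_metrics_year(d: date) -> int:
    """Label a date with the GiveWell metrics year it falls in.

    Assumes a metrics year runs 1 Feb to 31 Jan and is labeled by the
    calendar year in which it starts, so January 2022 belongs to 2021.
    """
    return d.year if d.month >= 2 else d.year - 1


def givewell_net_directed(directed_by_funder: dict[str, float]) -> float:
    """Sum GiveWell 'funds directed', skipping funders tallied elsewhere."""
    return sum(
        amount
        for funder, amount in directed_by_funder.items()
        if funder not in ALREADY_COUNTED
    )
```

For example, with hypothetical figures of $300M directed by Open Phil, $10M by EA Funds and $200M by other donors, `givewell_net_directed` returns 200.0, and a grant dated 15 January 2022 falls in metrics year 2021 rather than calendar year 2022.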

Open Phil

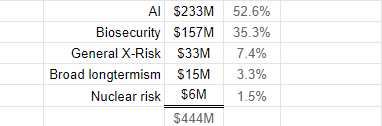

- Open Phil labels their grants using 25 'focus areas'. My subjective mapping of these to broader cause areas is laid out in the spreadsheet.

- Note that about 20% of funds granted by Open Phil have gone to 'other' areas such as Criminal Justice Reform; these are omitted from the summary figures but still tabulated elsewhere in the spreadsheet.
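A minimal Python sketch of this mapping step, assuming each grant is a (focus area, amount) pair. The focus-area names and their mapping below are illustrative only (the post confirms only that Criminal Justice Reform counts as 'other'); the real mapping of all 25 focus areas lives in the spreadsheet.

```python
from collections import defaultdict

# Hypothetical, partial mapping from Open Phil focus areas to broad cause areas.
FOCUS_TO_CAUSE = {
    "Global Health & Development": "Global Health",
    "Farm Animal Welfare": "Animal Welfare",
    "Criminal Justice Reform": "Other",
}


def summarize_by_cause(grants):
    """Total (focus_area, amount) grants by broad cause area.

    Returns (summary, other_total). Focus areas mapped to "Other", or not
    mapped at all, are kept out of the summary but tallied separately.
    """
    summary = defaultdict(float)
    other_total = 0.0
    for focus_area, amount in grants:
        cause = FOCUS_TO_CAUSE.get(focus_area, "Other")
        if cause == "Other":
            other_total += amount
        else:
            summary[cause] += amount
    return dict(summary), other_total
```

Treating unmapped focus areas as 'other' keeps the summary conservative: a new focus area only enters the headline figures once it has been mapped deliberately.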

General

- The 2022 estimates are somewhat speculative, but they are a reasonable guess at how funding will look with the addition of the Future Fund.

- The total Global Health figure for 2021 (~$400M) looks surprisingly low given that GiveWell just reported over $500M in funds directed for 2021 (including Open Phil and EA Funds). I think the gap is accounted for by (a) GiveWell's metrics year extending through January 2022 (Open Phil reported $26M of Global Health grants that month), and (b) the possibility that some of this money was 'directed' by Open Phil in 2021, i.e. as a firm commitment of $X to org Y, but paid out or recorded in the grants database months later. I am still seeking explicit confirmation on this.

Future work

If there is any currently available data that seems worth adding, let me know and I will consider including it.

I may pursue a more comprehensive analysis of this topic, e.g. using the full budget of every GiveWell-recommended charity. I'd like to hear if anyone has access to this kind of data, or if such a project seems particularly valuable.

Thanks to Niel Bowerman for helpful comments.

[1] Currently the bottleneck to synchronizing data is the GiveWell annual metrics report, which is typically published in the second half of the following year. I may update more often if that is useful.

Thanks for doing and sharing this, really interesting!

Random curiosity, how did your spreadsheet make it into the time.com article about EA?

Naina (the Time journalist) and I were chatting about the aggregate funding data but couldn’t quickly find a source. I connected Naina and Tyler to work on this together. Tyler pulled together the data in part for the Time article.