This is a special post for quick takes by RyanCarey. Only they can create top-level comments. Comments here also appear on the Quick Takes page and All Posts page.

Should we fund people for more years at a time? I've heard that various EA organisations and individuals with substantial track records still need to apply for funding one year at a time, because they either are refused longer-term funding, or they perceive they will be.

For example, the LTFF page asks for applications to be "as few as possible", but clarifies that this means "established organizations once a year unless there is a significant reason for submitting multiple applications". Even the largest organisations seem to only receive OpenPhil funding every 2-4 years. For individuals, even if they are highly capable, ~12 months seems to be the norm.

Offering longer (2-5 year) grants would have some obvious benefits:

Grantees spend less time writing grant applications

Evaluators spend less time reviewing grant applications

Grantees plan their activities longer-term

The biggest benefit, though, I think, is that:

Grantees would have greater career security.

Job security is something people value immensely. This is especially true as you get older (something I've noticed tbh), and would be even more so for someone trying to raise kids. In the EA economy, many people get by on short-term gr...

Lots of my favorite EA people seem to think this is a good idea, so I'll provide a dissenting view: job security can be costly in hard-to-spot ways.

I notice that the places that provide the most job security are also the least productive per-person (think govt jobs, tenured professors, big tech companies). The typical explanation goes like "a competitive ecosystem, including the ability for upstarts to come in and senior folks to get fired, leads to better services provided by the competitors"

I think respondents on the EA Forum may think "oh of course I'd love to get money for 3 years instead of 1". But y'all are pretty skewed in terms of response bias -- if a funder has $300k and gives that all to the senior EA person for 3 years, they are passing up on the chance to fund other potentially better upstarts for years 2 & 3.

Depending on which specific funder you're talking about, they don't actually have years of funding in the bank! Afaict, most funders (such as the LTFF and Manifund) get funds to disburse over the next year, and in fact get chastised by their donors if they seem to be holding on to funds for longer than that. Donors themselves don't have years of foresight into

I would be surprised if people on freelancer marketplaces are exceptionally productive - I would guess they end up spending a lot more of their time trying to get jobs than actually doing the jobs.

I do think freelancers spend significant amounts of time on job searching, but I'm not sure that's evidence for "low productivity". Productivity isn't a function of total hours worked but rather of output delivered. The one time I engaged a designer on a freelancer marketplace, I got decent results exceptionally quickly. Another "freelancer marketplace" I make heavy use of is Uber, which provides good customer experiences.

Of course, there's a question of whether such marketplaces are good for the freelancers themselves - I tend to think so (eg that the existence of Uber is better for drivers than the previous taxi medallion system) but freelancing is not a good fit for many folks.

9

S.E. Montgomery

Do you have evidence for this? Because there is lots of evidence to the contrary - suggesting that job insecurity negatively impacts people's productivity as well as their physical and mental health.[1][2][3]

This goes both ways - yes, there is a chance to fund other potentially better upstarts, but by only offering short-term grants, funders also miss out on applicants who want/need more security (e.g. competitive candidates who prefer more secure options, parents, people supporting family members, people with big mortgages, etc).

I think there are options here that would help both funders and individuals. For example, longer grants could be given with a condition that either party can give a certain amount of notice to end the agreement (typical in many US jobs), and many funders could re-structure to allow for longer grants/a different structure for grants if they wanted to. As long as these changes were well-communicated with donors, I don't see why we would be stuck to a 1-year cycle.

My experience: As someone who has been funded by grants in the past, job security was a huge reason for me transitioning away from this. It's also a complaint I've heard frequently from other grantees, and something that not everyone can even afford to do in the first place. I'm not implying that donors need to hire people or keep them on indefinitely, but even providing grants for 2 or more years at a time would be a huge improvement to the 1-year status quo.

7

Elizabeth

FWIW I can imagine being really happy under this system. Contingent on grantmaker/supervisor quality of course, and since those already seem to be seriously bottlenecked this doesn't feel like an easy solution to me. But I'd love to see it work out.

2

RyanCarey

I'm focused on how the best altruistic workers should be treated, and if you think that giving them job insecurity would create good incentives, I don't agree. We need the best altruistic workers to be rewarded not just better than the less productive altruists, but also better than those pursuing non-altruistic endeavours. It would be hard to achieve this if they do not have job security.

5

Austin

I'm sympathetic to treating good altruistic workers well; I generally advocate for a norm of much higher salaries than is typically provided. I don't think job insecurity per se is what I'm after, but rather allowing funders to fund the best altruistic workers next year, rather than being locked into their current allocations for 3 years.

The default in the for-profit sector in the US is not multi-year guaranteed contracts but rather at-will employment, where workers may leave a job or be fired for basically any reason. It may seem harsh in comparison to norms in other countries (eg the EU or Japan) but I also believe it leads to more productive allocation of workers to jobs.

Remember that effective altruism exists not to serve its workers, but rather to effectively help those in need (people in developing countries, suffering animals, people in the future!) There are instrumental benefits in treating EA workers well in terms of morale, fairness, and ability to recruit, but keep in mind the tradeoffs of less effective allocation schemes.

9

Larks

I think there's a big difference between "you are an at-will employee, and we can fire you on two weeks' notice, but the default is you will stay with us indefinitely" and "you have a one year contract and can re-apply at the end". Legally the latter gives the worker 50 extra weeks of security, but in practice the former seems to be preferable to many people.

5

Austin

I agree that default employment seems preferred by most fulltime workers, and that's why I'm interested in the concept of "default-recurring monthly grants".

I will note that this employment structure is not the typical arrangement among founders trying to launch a startup, though. A broad class of grants in EA are "work on this thing and maybe turn it into a new research org", and the equivalent funding norms in the tech sector at least are not "employment" but "apply for incubators, try to raise funding".

For EAs trying to do research... academia is the typical model for research but I also think academia is extremely inefficient, so copying the payment model doesn't seem like a recipe for success. FROs are maybe the closest thing I can think of to "multi-year stable grants" - but there's only like 3 of those in existence and it's very early to say if they produce good outcomes.

1

Aaron_Scher

What does FRO stand for?

4

Austin

"Focused Research Org"

3

RyanCarey

Yes, because when you are an at-will employee, the chance that you will still have income in n years tends to be higher than if you had to apply to renew a contract, and you don't need to think about that application. People are typically angry if asked to reapply for their own job, because it implies that their employer might want to terminate them.

Another consideration is turnaround time. Grant decisions are slow, deadlines are imperfectly timed, distribution can be slow. So you need to apply many months before you need the money, which means well before you've used up your current grant, which means planning your work to have something to show ~6 months into a one year grant. Which is just pretty slow.

I don't know what the right duration is, but the process needs to be built so that continuous funding for good work is a possibility, and possible to plan around.

My hope for addressing that problem is for grantmakers (including us) to suck less in the future and have speedier turnaround times, especially reducing the long tail of very slow responses.

5

MichaelDickens

How feasible do you think this is? From my outsider perspective, I see grantmakers and other types of application-reviewers taking 3-6 months across the board and it's pretty rare to see them be faster than that, which suggests it might not be realistic to consistently review grants in <3 months.

eg the only job application process I've ever done that took <3 months was an application to a two-person startup.

2

Linch

It's a good question. I think several grantmaking groups local to us (Lightspeed grants, Manifund, the ill-fated FF regranting program) have promised and afaict delivered on a fairly fast timeline. Though all of them are/were young and I don't have a sense of whether they can reliably be quick after being around for longer than say 6 months.

LTFF itself has a median response time of about 4 weeks or so iirc. There might be some essential difficulties with significantly speeding up (say) the 99th percentile (eg needing stakeholder buy-in, complicated legal situations, trying to pitch a new donor for a specific project, trying to screen for infohazard concerns when none of the team are equipped to do so), but if we are able to get more capacity, I'd like us to at least get (say) the 85th or 95th tail down a lot lower.

I feel like reliability is more important than speed here, and it's ~impossible to get this level of reliability from orgs run by unpaid volunteers with day jobs. Especially when volunteer hours aren't fungible and the day jobs are demanding.

I think Lightspeed set a fairly ambitious goal it has struggled to meet. I applied for a fast turn around and got a response within a week, but two months later I haven't received the check. The main grants were supposed to be announced on 8/6 and AFAIK still haven't been. This is fine for me, but if people didn't have to plan around it or risk being screwed I think it would be better.

Based on some of Ozzie's comments here, I suspect that using a grant process made for organizations to fund individuals is kind of doomed, and you either want to fund specific projects without the expectation it's someone's whole income (which is what I do), or do something more employer-like with regular feedback cycles and rolling payments. And if you do the former, it needs to be enough money to compensate for the risk and inconvenience of freelancing.

I kind of get the arguments against paying grantmakers, but from my perspective I'd love to see you paid more with a higher reliability level.

Yeah, this is a bit sad. I am happy to offer anyone who received a venture grant to have some money advanced if they need it, and tried to offer this to people where I had a sense it might help.

I am sad that we can't get out final grant confirmations in time, though we did get back to the vast majority of applicants with a negative response on-time. I am also personally happy to give people probability estimates of whether they will get funding, which for some applicants is very high (95%+), which hopefully helps a bit. I also expect us to hit deadlines more exactly in future rounds.

2

Elizabeth

What would have had to change to make the date? My impression was the problem was sheer volume, which sounds really hard and maybe understandable to prep for. You can put a hard cap, but that loses potentially good applications. You could spend less time per application, but that biases towards legibility and familiarity. You could hire more evaluators, but my sense is good ones are hard to find and have costly counterfactuals.

The one thing I see is "don't use a procedure that requires evaluating everyone before giving anyone an answer" (which I think you are doing?), but I assume there are reasons for that.

6

Habryka [Deactivated]

The central bottleneck right now is getting funders to decide how much money to distribute, and through which evaluators to distribute the funds.

I budgeted around a week for that, but I think realistically it will take more like 3. This part was hard to forecast because I don't have a super high-bandwidth channel to Jaan, his time is very valuable, and I think he was hit with a bunch of last-minute opportunities that made him busy in the relevant time period.

I also think even if that had happened on my original 7 day timeline, we still would have been delayed by 4 days or so, since the volume caused me to make some mistakes in the administration which then required the evaluators going back and redoing some of their numbers and doing a few more evaluations.

2

Elizabeth

I wonder if some more formalized system for advances would be worth it? I think this kind of chaos is one of the biggest consequences of delays for payments, and a low-friction way to get bridge loans would remove a lot of the costs.

You could run it as pure charity or charge reasonable fees to grantmakers for the service.

2

Habryka [Deactivated]

Yeah, I would quite like that. In some sense the venture granting system we have is trying to be exactly that, but that itself is currently delayed on getting fully set up legally and financially. But my current model is that as soon as it's properly set up, we can just send out money on like a 1-2 day turnaround time, reliably.

2

Linch

EA Funds offers pay to grantmakers (~$60/h which I think should be fairly competitive with people's nonprofit counterfactuals); most people have other day jobs however.

I don't think pay is the limiting factor for people, who usually have day jobs and also try to do what they consider to be the highest impact (plus other personal factors).

Though I can imagine there might be some sufficiently high numbers to cause part-time grantmakers to prioritize grantmaking highly, but a) this might result in a misallocation of resources, b) isn't necessarily sustainable, and c) might have pretty bad incentives.

Great point about the reliability overall though. I do think it'd be hard to make real promises of the form "we'll definitely get back to you by X date" for various factors, including practical/structural ones.

7

Elizabeth

It wouldn't surprise me if there was no good way to speed up grantmaking, given the existing constraints. If that's true, I'd love to shift the system to something that recognizes that and plans around it (through bridge loans, or big enough grants that people can plan around delays, or something that looks more like employment), rather than hope that next year is the year that will be different for unspecified reasons.

I agree - I think the financial uncertainty created by having to renew funding each year is very significantly costly and stressful and makes it hard to commit to longer-term plans.

I think that the OpenPhil situation can be decent. If you have a good manager/grantmaker that you have a good relationship with and a lot of trust in, then I think this could provide a lot of the benefit. You don't have assurance, but you should have a solid understanding of what you need to accomplish in order to get funding or not - and thus, this would give you a good idea of your chances.

I think that the non-OP funders are pretty amateurish here and could improve a lot.

I think the situation now is pretty lacking. However, the most obvious/suggested fix is more like "better management and ongoing relationships", rather than 3+ year grants. The former could basically be the latter, if desired (once you start getting funding, it's understood to be very likely to continue, absent huge mess-ups).

Just for the record, I don't think OP has been doing well in this respect since the collapse of FTX. My sense is few Open Phil grantees know the conditions under which they would get more funding, or when they might receive an answer. At least I don't, and none of the Open Phil grantees I've talked to in the past few months about this felt like this was handled well either.

No idea if it would be better to pay in lump sums or tranches.

You could also pay in a tailing off fashion, where if you fund for $1mn next year you automatically fund for 200k the year after. That way orgs would always have money for a slow down in funding.
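The tailing-off idea can be made concrete with a small sketch. This is only an illustration under stated assumptions: the 20% tail ratio is read off the $1mn / $200k example above, the function name `funding_schedule` is hypothetical, and the sketch assumes a tail simply stacks on top of whatever new grant is made in the following year.

```python
def funding_schedule(new_grants, tail_ratio=0.2):
    """Return total committed funding per year.

    new_grants[i] is the main grant awarded for year i. Each main grant
    also commits tail_ratio * amount for year i + 1 (the "tail").
    The 0.2 default mirrors the $1mn -> $200k example; it is an assumption.
    """
    # One extra slot so the final year's tail has somewhere to land.
    totals = [0.0] * (len(new_grants) + 1)
    for year, amount in enumerate(new_grants):
        totals[year] += amount
        totals[year + 1] += tail_ratio * amount
    return totals


# A funder grants $1mn in year 0 and then nothing in year 1:
# the org still has tail money in year 1 to handle the slowdown.
for year, total in enumerate(funding_schedule([1_000_000, 0])):
    print(f"year {year}: ${total:,.0f}")
```

Under these assumptions, an org that loses its main grant still receives a fifth of the previous year's amount, which is the buffer the comment describes.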

4

rime

Paying people for what they do works great if most of their potential impact comes from activities you can verify. But if their most effective activities are things they have a hard time explaining to others (yet have intrinsic motivation to do), you could miss out on a lot of impact by requiring them instead to work on what's verifiable.

Perhaps funders should consider granting motivated altruists multi-year basic income. Now they don't have to compromise[1] between what's explainable/verifiable vs what they think is most effective—they now have independence to purely pursue the latter.

Bonus point: People who are much more competent than you at X[2] will probably behave in ways you don't recognise as more competent. If you could, they wouldn't be much more competent. Your "deference limit" is the level of competence above which you stop being able to reliably judge the difference between experts.

1. ^

Consider how the cost of compromising between optimisation criteria interacts with what part of the impact distribution you're aiming for. If you're searching for a project with top p% impact and top p% explainability-to-funders, you can expect only p^2 of projects to fit both criteria—assuming independence.

But I think it's an open question how & when the distributions correlate. One reason to think they could sometimes be anticorrelated is that the projects with the highest explainability-to-funders are also more likely to receive adequate attention from profit-incentives alone.

If you're doing conjunctive search over projects/ideas for ones that score above a threshold for multiple criteria, it matters a lot which criteria you prioritise most of your parallel attention on to identify candidates for further serial examination. Try out various examples here & here.

2. ^

At least for hard-to-measure activities where most of the competence derives from knowing what to do in the first place. I reckon this includes most fields o
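The p^2 estimate in footnote 1 can be checked with a quick simulation. This is a rough sketch, assuming (as the footnote does) that impact and explainability are independent, and treating p as a fraction (e.g. 0.10 for "top 10%"). The uniform scores and the function name `joint_top_fraction` are illustrative choices, not anything from the original comment.

```python
import random


def joint_top_fraction(p, n=200_000, seed=0):
    """Estimate the share of projects that are in the top fraction p
    on two independent criteria (impact and explainability)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        impact = rng.random()          # percentile rank on impact
        explainability = rng.random()  # independent percentile rank
        if impact > 1 - p and explainability > 1 - p:
            hits += 1
    return hits / n


p = 0.10
print(f"predicted p^2 = {p ** 2:.4f}")
print(f"simulated     = {joint_top_fraction(p):.4f}")
```

If the two criteria were positively correlated, the joint share would be larger than p^2; if anticorrelated, as the footnote speculates can happen, it would be smaller.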

4

Jackson Wagner

Some discussion of the "career security" angle, in the form of me asking how 1-year-grant recipients are conceiving of their longer-term career arcs: https://forum.effectivealtruism.org/posts/KdhEAu6pFnfPwA5hf/grantees-how-do-you-structure-your-finances-and-career

3

porby

In the absence of longer-term security, it would be nice to have more foresight about income.

I can tolerate quite a lot of variance on the 6+ month timescale if I know it's coming. If I know I'm not going to get another grant in 6 months, there are things I can do to compensate.

A mistake I made recently is applying relatively late due to an incorrect understanding of the funding situation, getting rejected, and now I'm temporarily unable to continue safety research. (Don't worry, I'm in no danger of going homeless or starving, just lowered productivity.)

Applying for grants sooner would help mitigate this, but there are presumably limits. I would guess that grantmaking organizations would rather not commit funds to a project that won't start for another 2 years, for example.

If there was a magic way to get an informed probability on future grant funding, that would help a lot. I'm not sure how realistic this is; a lot of options seem pretty high overhead for grantmakers and/or involve some sort of fancy prediction markets with tons of transparency and market subsidies. Having continuous updates about some smaller pieces of the puzzle could help.

2

James Herbert

Yeah, this would be nice to have. CEA's Community Building Grants (CBG) programme had the possibility for 2-year renewals for a brief period, but this policy was recently scrapped due to funding uncertainty. Shardul from EA India shared with me his experience as the second hire at GFI India. If I recall correctly, they were given funding for something like 5 years to get going, and he said this transformed their ability to build and execute a vision. The value of this stability was brought into relief when he joined the CBG programme, which has similar aims but nowhere near the stability.

From a productivity point of view, I guess what we ought to aim for is flexicurity, as typified by Denmark. For those fortunate enough to live in parts of Northwestern Europe this is already the case thanks to strong social security, but I struggle to see how a movement can provide it elsewhere. Perhaps a Basefund but for employment?

1

Aaron_Scher

I think Ryan is probably overall right that it would be better to fund people for longer at a time. One counter-consideration that hasn't been mentioned yet: longer contracts implicitly and explicitly push people to keep doing something — that may be sub-optimal — because they drive up switching costs.

If you have to apply for funding once a year no matter what you're working on, the "switching costs" of doing the same thing you've been doing are similar to the cost of switching (of course they aren't in general, but with regard to funding they might be). I think it's unlikely but not crazy that the effects of status quo bias might be severe enough that funders artificially imposing switching costs on "continuing/non-switching" results in net better results. I expect that in the world where grants usually last 1 year, people switch what they're doing more than the world where grants are 3 years, and it's plausible these changes are good for impact.

Some factors that seem cruxy here:

* how much can be gained through realistic switching (how bad is current allocation of people, how much better are the things people would do if switching costs were relatively zero as they sorta are, how much worse are the things people would be doing if continuing-costs were low).

* it seems very likely that this consideration should affect grants to junior and early-career people that are bouncing around more but it probably doesn't apply much to more senior folks who have been working on a project for a while (on the other hand, maybe you want the junior people investing more in long term career plans, thus switching less, it depends on the person).

* Could relatively-zero switching costs actually hurt things because people switch too much due to over-correcting (e.g., Holden Karnofsky gets excited about AI evaluations so a bunch of people work on it and then governance gets big so people switch to that, and then etc.)?

* How much does it help to have people with a lot of experi

2

RyanCarey

I agree. Early-career EAs are more likely to need to switch projects, less likely to merit multi-year funding, and have - on average - less need for job-security. Single-year funding seems OK for those cases.

For people and orgs with significant track records, however, it seems hard to justify.

Summary: The piece could give the reader the impression that Jacy, Felicifia and THINK played a comparably important role to the Oxford community, Will, and Toby, which is not the case.

I'll follow the chronological structure of Jacy's post, focusing first on 2008-2012, then 2012-2021. Finally, I'll discuss "founders" of EA, and sum up.

2008-2012

Jacy says that EA started as the confluence of four proto-communities: 1) SingInst/rationality, 2) Givewell/OpenPhil, 3) Felicifia, and 4) GWWC/80k (or the broader Oxford community). He also gives honorable mentions to randomistas and other Peter Singer fans. Great - so far I agree.

What is important to note, however, are the contributions that these various groups made. For the first decade of EA, most key community institutions of EA came from (4) - the Oxford community, including GWWC, 80k, and CEA, and secondly from (2), although Givewell seems to me to have been more of a grantmaking entity than a community hub. Although the rationality community provided many key ideas and introduced many key individuals to EA, the institutions that it ran, such as CFAR, were mostl...

Thanks for this, and for your work on Felicifia. As someone who's found it crucial to have others around me setting an example for me, I particularly admire the people who basically just figured out for themselves what they should be doing and then started doing it.

Fwiw re THINK: I might be wrong in this recollection, but at the time it felt like very clearly Mark Lee's organisation (though Jacy did help him out). It also was basically only around for a year. The model was 'try to go really broad by contacting tonnes of schools in one go and getting hype going'. It was a cool idea which had precedent, but my impression was the experiment basically didn't pan out.

Regarding THINK, I personally also got the impression that Mark was a sole-founder, albeit one who managed other staff. I had just taken Jacy's claim of co-founding THINK at face value. If his claim was inaccurate, then clearly Jacy's piece was more misleading than I had realised.

I agree with the impression that Mark Lee seemed the sole founder. I was helping Mark Lee with some minor contributions at THINK in 2013, and Jacy didn't occur to me as one of the main contributors at the time. (Perhaps he was more involved with a specific THINK group, but not the overall organization?)

[Edit: I've now made some small additions to the post to better ensure readers do not get the impressions that you're worried about. The substantive content of the post remains the same, and I have not read any disagreements with it, though please let me know if there are any.]

I think I agree with essentially all of this, though I would have preferred if you gave this feedback when you were reading the draft because I would have worded my comments to ensure they don't give the impression you're worried about. I strongly agree with your guess that EA would probably have come to exist without Will and Toby, and I would extend that to a guess for any small group. Of course such guesses are very speculative.

I would also emphasize my agreement with the claim that the Oxford community played a larger role than Felicifia or THINK, but I think EA's origins were broader and more diverse than most people think. My guess for Will and Toby's % of the hours put into "founding" it would be much lower than your 20%.

On the co-founder term, I think of founders as much broader than the founders of, say, a company. EA has been the result of many people's efforts, many of whom I think are ignored or diminished in some tellings of EA history. That being said, I want to emphasize that I think this was only on my website for a few weeks at most, and I removed it shortly after I first received negative feedback on it. I believe I also casually used the term elsewhere, and it was sometimes used by people in my bio description when introducing me as a speaker. Again, I haven't used it since 2019.

I emphasize Felicifia in my comments because that is where I have the most first-hand experience to contribute, its history hasn't been as publicized as others, and I worry that many (most?) people hearing these histories think the history of EA was more centralized in Oxford than it was, in my opinion.

I'm glad you shared this information, and I will try to improve and clarify the post asap.

> I think I agree with essentially all of this, though I would have preferred if you gave this feedback when you were reading the draft because I would have worded my comments to ensure they don't give the impression you're worried about.

If it seemed to you like I was raising different issues in the draft, then each to their own, I guess. But these concerns were what I had in mind when I wrote comments like the following:

> 2004–2008: Before I found other EAs

If you're starting with this, then you should probably include "my" in the title (or similar) because it's about your experience with EA, rather than just an impartial historical recount... you allocate about 1/3 of the word count to autobiographical content that is only loosely related to the early history of EA...

> In general, EA emerged as the convergence from 2008 to 2012 at least 4 distinct but overlapping communities

I think the "EA" name largely emerged from (4), and its core institutions mostly from (4) with a bit of (2). You'd be on more solid ground if you said that the EA community - the major contributors - emerged from (1-4), or if you at least clarified this somehow.

[Edit: I've now made some small additions to the post to better ensure readers do not get the impressions that you're worried about. The substantive content of the post remains the same, and I have not read any disagreements with it, though please let me know if there are any.]

Thanks for clarifying. I see the connection between both sets of comments, but the draft comments still seem more like 'it might be confusing whether this is about your experience in EA or an even-coverage history', while the new comments seem more like 'it might give the impression that Felicifia utilitarians and LessWrong rationalists had a bigger role, that GWWC and 80k didn't have student groups, that EA wasn't selected as a name for CEA in 2011, and that you had as much influence in building EA as Will or Toby.' These seem meaningfully different, and while I adjusted for the former, I didn't adjust for the latter.

(Again, I will add some qualification as soon as I can, e.g., noting that there were other student groups, which I'm happy to note but just didn't because that is well-documented and not where I was personally most involved.)

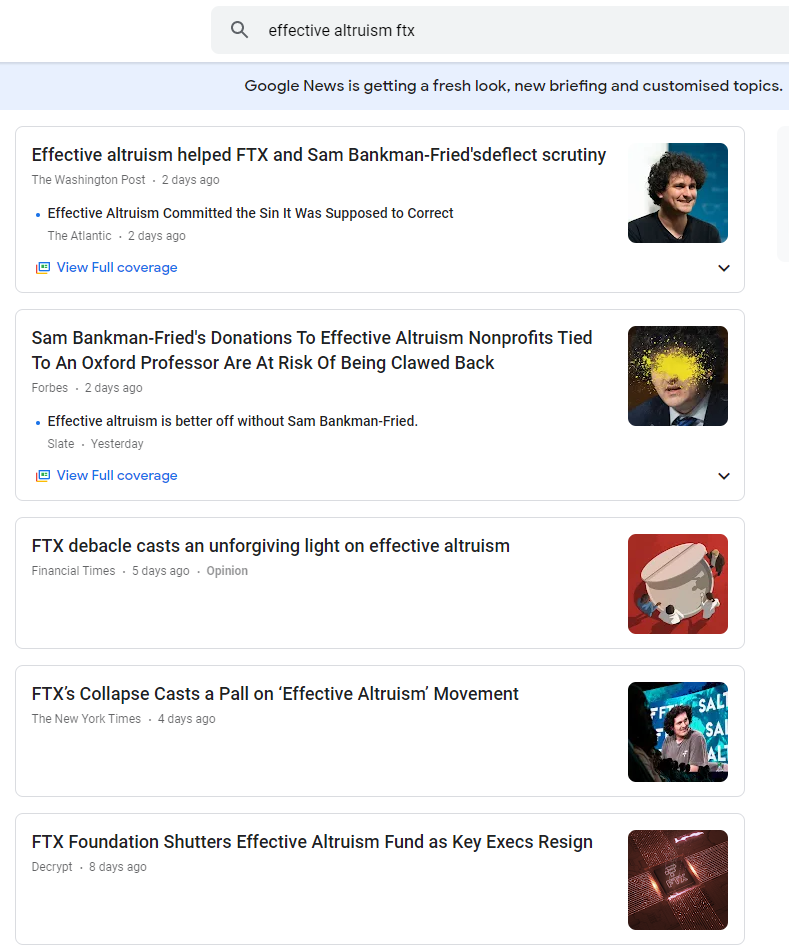

1. In the last two weeks, SBF has had about 2M views to his Wikipedia page. This absolutely dwarfs the number of pageviews to any major EA previously.

2. Viewing the same graph on a logarithmic scale, we can see that even before the recent crisis, SBF was the best known EA. Second was Moskovitz, and roughly tied at third are Singer and MacAskill.

3. Since the scandal, many people will have heard about effective altruism, in a negative light. It has been accumulating pageviews at about 10x the normal rate. If pageviews are a good guide, then 2% of people who had heard about effective altruism ever would have heard about it in the last two weeks, through the FTX implosion.

4. Interest in "longtermism" has been only weakly affected by the FTX implosion, and "existential risk" not at all.

Given this and the fact that two books and a film are on the way, I think that "effective altruism" ~~doesn't have any brand value anymore~~ is more likely than not to lose all its brand value. Whereas "existential risk" is far enough removed that it is untainted by these events. "Longt...

It may be premature to conclude that EA doesn't have any brand value anymore, though the recent crisis has definitely been disastrous for the EA brand, and may justify rebranding.

This is excellent. I hadn't even thought to check. Though I think I disagree with the strength of your conclusion.

If you look back since 2015 ("all time"), it looks like this.[1] Keep in mind that Sam has 1.1M pageviews since before November[2] (before anyone could know anything about anything). Additionally, if you browse his Wikipedia page, EA is mentioned under "Careers", and is not something you read about unless particularly interested. On the graph, roughly 5% click through to EA. (Plus, most of the news-readers are likely to be in the US, so the damage is localised?)

A point of uncertainty for me is to what extent news outlets will drag out the story. Might be that most of the negative-association-pageviews are yet to come, but I suspect not? Idk.

Not sure how to make the prediction bettable but I think I'm significantly less pessimistic about the brand value than you seem to be.

Feel free to grab these if you want to make a post of it. Seems very usefwly calibrating.


6

Steven Byrnes

The implications for "brand value" would depend on whether people learn about "EA" as the perpetrator vs. victim. For example, I think there were charitable foundations that got screwed over by Bernie Madoff, and I imagine that their wiki articles would have also had a spike in views when that went down, but not in a bad way.

7

RyanCarey

I agree in principle, but I think EA shares some of the blame here - FTX's leadership group consisted of four EAs. It was founded for ETG reasons, with EA founders and with EA investment, by Sam, an act utilitarian, who had been a part of EA-aligned groups for >10 years, and with a foundation that included a lot of EA leadership, and whose activities consisted mostly of funding EAs.

3

[anonymous]

I love that you've shared this data, but I disagree that this and the books/film suggest that EA is more likely than not to lose all its brand value (although I'm far from confident in that claim).

I don't have statistics, but my subjective impression is that most of the coverage of the FTX crisis that mentions EA so far has either treated us with sympathy or has been kind of neutral on EA. Then I think another significant fraction is mildly negative - the kind of coverage that puts off people who will probably never like us much anyway, and then of the remainder, puts off about half of them and actually attracts the rest (not because of the negative part, but because they read enough that they end up liking EA on balance, so the coverage just brings forward their introduction to EA). Obviously not all publicity is good publicity, but I think this kind of reasoning might be where the saying comes from.

Again, this is all pretty subjective and wishy-washy. I just wanted to push back a bit on jumping from "lots of media coverage associated with a bad thing" to "probably gonna lose all brand value."

I also argued here that causes in general look mildly negative to people outside of them. And my sense is that the FTX crisis won't have made the EA brand much worse than that overall.

Honestly even if that 2% was 50% I think I'd still be leaning in the 'the EA brand will survive' direction. And I think several years ago I would have said 100% (less sure now).

But it's still early days. Very plausible the FTX crisis could end up looking extremely bad for EA.

My impression is that the coverage of EA has been more negative than you suggest, even though I don't have hard data either. It could be useful to look into.

Hmm I'm probably not gonna read them all but from what I can see here I'd guess:

1. Negative

2. Seems kinda mixed - neutral?

3. Negative

4. Sympathetic but less sure on this one

5. Neutral/sympathetic

The NYT article isn't an opinion piece but a news article, and I guess that it's a bit less clear how to classify them. Potentially one should distinguish between news articles and opinion pieces. But in any event, I think that if someone who didn't know about EA before reads the NYT article, they're more likely to form a negative than a positive opinion.

Hmm, I suspect that anyone who had the potential to be bumped over the threshold for interest in EA would be likely to view the EA Wikipedia article positively despite clicking through to it via SBF. Though I suspect there are only a small number of people with the potential to be bumped over that threshold. I put around 10% probability on the negative news having been positive for the movement, primarily because it gained exposure. Unlikely, but not beyond the realm of possibility. Oo

A case of precocious policy influence, and my pitch for more research on how to get a top policy job.

Last week Lina Khan was appointed as Chair of the FTC, at age 32! How did she get such an elite role? At age 11, she moved to the US from London. In 2014, she studied antitrust topics at the New America Foundation (centre-left think tank). Got a JD from Yale in 2017, and published work relevant to the emerging Hipster Antitrust movement at the same time. In 2018, she worked as a legal fellow at the FTC. In 2020, became an associate professor of law at Columbia. This year - 2021 - she was appointed by Biden.

The FTC chair role is an extraordinary level of success to reach at such a young age. But it kind-of makes sense that she should be able to get such a role: she has elite academic credentials that are highly relevant for the role, has ridden the hipster antitrust wave, and has experience of and willingness to work in government.

I think biosec and AI policy EAs could try to emulate this. Specifically, they could try to gather some elite academic credentials, while also engaging with regulatory issues and working for regulators, or more broadly, in the executive branch of government. ... (read more)

What's especially interesting is that the one article that kick-started her career was, by truth-orientated standards, quite poor. For example, she suggested that Amazon was able to charge unprofitably low prices by selling equity/debt to raise more cash - but you only have to look at Amazon's accounts to see that they have been almost entirely self-financing for a long time. This is because Amazon has actually been cashflow positive, in contrast to the impression you would get from Khan's piece. (More detail on this and other problems here).

Depressingly this suggests to me that a good strategy for gaining political power is to pick a growing, popular movement, become an extreme advocate of it, and trust that people will simply ignore the logical problems with the position.

My impression is that a lot of her quick success was because her antitrust stuff tapped into progressive anti Big Tech sentiment. It's possible EAs could somehow fit into the biorisk zeitgeist but otherwise, I think it would take a lot of thought to figure out how an EA could replicate this.

Agreed that in her outlying case, most of what she's done is tap into a political movement in ways we'd prefer not to. But is that true for high-performers generally? I'd hypothesise that elite academic credentials + policy-relevant research + willingness to be political, is enough to get people into elite political positions, maybe a tier lower than hers, a decade later, but it'd be worth knowing how all the variables in these different cases contribute.

Putting things in perspective: what is and isn't the FTX crisis, for EA?

In thinking about the effect of the FTX crisis on EA, it's easy to fixate on one aspect that is really severely damaged, and then to doomscroll about that, or conversely to focus on an aspect that is more lightly affected, and therefore to think all will be fine across the board. Instead, we should realise that both of these things can be true for different facets of EA. So in this comment, I'll now list some important things that are, in my opinion, badly damaged, and some that aren't, or that might not be.

What in EA is badly damaged:

The brand “effective altruism”, and maybe to an unrecoverable extent (but note that most new projects have not been naming themselves after EA anyway.)

The publishability of research on effective altruism (philosophers are now more sceptical about it).

The “innocence” of EA (EAs appear to have defrauded ~4x what they ever donated). EA, in whatever capacity it continues to exist, will be harshly criticised for this, as it should be, and will have to be much more thick-skinned in future.

The amount of goodwill among promoters of EA (they have lost funds on FTX, regranters have been emb

I also can't think of a bigger scandal in the 223-year history of utilitarianism

I feel like there's been a lot here, though not as "one sudden shock".

Utilitarianism being a key strain of thought in early England, which went on to colonize the world and do many questionable things. I'm not sure how close that link is, but it's easy to imagine a lot of things (just by it being such a big deal).

Not exactly the same, but I believe that a lot of "enlightenment thinking" and similar got a ton of heat. The French Revolution, for example. Much later, arguably, a lot of WW1 and WW2 can be blamed on some of this thinking (atheism too, of course). I think the counter-enlightenment and similar jump on this.

Peter Singer got into a ton of heat for his utilitarian beliefs (though of course, the stakes were smaller)

Really, every large ideology I could think of has some pretty massive scandals associated with it. The political left, political right, lots of stuff. FTX is tame compared to a lot of that. (Still really bad of course).

I think the FTX stuff is a bigger deal than Peter Singer's views on disability, and for me to be convinced about the England and enlightenment examples, you'd have to draw a clearer line between the philosophy and the wrongful actions (cf. in the FTX case, we have a self-identified utilitarian doing various wrongs for stated utilitarian reasons).

I agree that every large ideology has had massive scandals, in some cases ranging up to purges, famines, wars, etc. I think the problem for us, though, is that there aren't very many people who take utilitarianism or beneficentrism seriously as an action-guiding principle - there are only ~10k effective altruists, basically. What happens if you scale that up to 100k and beyond? My claim would be that we need to tweak the product before we scale it, in order to make sure these catastrophes don't scale with the size of the movement.

That's an interesting take. I think that for me, it doesn't feel that way, but this would be a long discussion, and my guess is that we both probably have fairly deep and different intuitions on this.

(Also, honestly, it seems like only a handful of people are really in charge of community growth decisions to me, and I don't think I could do much to change direction there anyway, so I'm less focused on trying to change that)

4

[anonymous]

What makes you say this? Anything public you can point to?

Translating EA into Republican. There are dozens of EAs in US party politics, Vox, the Obama admin, Google, and Facebook. Hardly any are in the Republican party, working for the WSJ, appointed by Trump, or working for Palantir. There are a dozen community groups in places like NYC, SF, Seattle, Berkeley, Stanford, Harvard, Yale. But none in Dallas, Phoenix, Miami, the US Naval Laboratory, the West Point Military Academy, etc - the libertarian-leaning GMU economics department being a sole possible exception.

This is despite the fact that people passing through military academies would be disproportionately more likely to work on technological dangers in the military and public service, while competition there is less intense than at more liberal colleges.

I'm coming to the view that similarly to the serious effort to rework EA ideas to align with Chinese politics and culture, we need to translate EA into Republican, and that this should be a multi-year, multi-person project.

I thought this Astral Codex Ten post, explaining how the GOP could benefit from integrating some EA-aligned ideas like prediction markets into its platform, was really interesting. Karl Rove retweeted it here. I don't know how well an anti-classism message would align with EA in its current form though, if Habryka is right that EA is currently "too prestige-seeking".

I've thought about this a few times since you wrote it, and I'd like to see what others think. Would you consider making it a top-level post (with or without any additional detail)?

1

Nathan Young

Maybe shortform posts could graduate to being normal posts if they get some number of upvotes?

6

Aaron Gertler 🔸

When someone writes a shortform post, they often intend for it to be less visible. I don't want an automated feature that will often go against the intentions of a post's author.

1

Nathan Young

Do you think they intend for less visibility or to signal it's a lower standard?

2

Aaron Gertler 🔸

Could be one, the other, neither, or both. But my point is that an automated feature that removes Shortform status erases those differences.

Here are five ideas, each of which I suspect could improve the flow of EA talent by at least a few percent.

1. A top math professor who takes on students in alignment-relevant topics

A few years ago, this was imperative in CS. Now we have some AIS professors in CS, and a couple in stats, but none in pure math. But some students obsessed with pure math, and interested in AIS, are very bright, yet don't want to drop out of their PhDs. Thus having a top professor could be a good way to catch these people.

2. A new university that could hire people as professors

Because some academics don't want to leave academia.

3. A recruitment ground for politicians. This could involve top law and policy schools, and would not be explicitly EA-branded.

Because we need more good candidates to support. And some distance between EAs and the politicians we support could help with both epistemic and reputational contamination.

4. Mass scholarships for undergrads at non-elite, non-US high-schools/undergrad, based on testing. This could award thousands of scholarships per year.

A lot of top scientists study undergrad in their own country, so it would make sense to either fund them... (read more)

A new EA university would also be useful for co-supervising PhDs for researchers at EA nonprofits, analogously to how other universities have to participate in order for researchers to acquire a PhD on-the-job in industry.

Sam Bankman-Fried (~$25B) is currently estimated to be about twice as rich as Dustin Moskovitz (~$13B). The rest of committed EA money is <$10B, so SBF and colleagues constitute close to, if not fully, half of all EA funds. I don't think people have fully reoriented toward this reality. For example, we should care more about top talent going to the FTX Foundation, and worry less if OpenPhil won't fund a pet project.

Obviously, crypto is volatile, so this may change!

An adjustment:

https://www.ndtv.com/world-news/elon-musk-igor-kurganov-where-did-elon-musks-5-7-billion-mystery-donation-go-2771003

9

RyanCarey

I think Elon is currently giving less, and less effectively than the donors I mentioned are (or that I expect Sam to, based on his pledges and so on) - the $5B may be to his own foundation/DAF. But I agree that he could quickly become highly significant by changing one or both of those things.

Getting advice on a job decision, efficiently (five steps)

When using EA considerations to decide between job offers, asking for help is often a good idea, even if those who could provide advice are busy, and their time is valued. This is because advisors can spend minutes of their time to guide years of yours. It's not disrespecting their "valuable" time, if you do it right. I've had some experience both as an advisor and as an advisee, and I think a safe bet is to follow these steps:

Make sure you actually have a decision that will concretely guide months to years of your time, i.e. ask about which offer to take, not which company to apply for.

Distill the pros and cons, and neutral attributes of each option down to a page or two of text, in a format that permits inline comments (ideally a GDoc). Specifically:

To begin with, give a rough characterization of each option, describing it in neutral terms.

Do mention non-EA considerations e.g. location preferences, alongside EA-related ones.

Remove duplicates. If something is listed as a "pro" for option A, it need not also be listed as a "con" for option B. This helps with conciseness and helps avoid arbitrary double-coun

Would it be more intuitive to do your 3-way comparison the other way around - list the pros and cons of each option relative to FHI, rather than of FHI relative to each alternative?

2

RyanCarey

I agree that's better. If I turn this into a proper post, I'll fix the example.

3

Johannes_Treutlein

Thanks for this meta-advice! Will try to adhere to it when asking for advice the next time :)

People often tell me that they encountered EA because they were Googling "How do I choose where to donate?", "How do I choose a high-impact career?" and so on. Has anyone considered writing up answers to these topics as WikiHow instructionals? It seems like it could attract a pretty good amount of traffic to EA research and the EA community in general.

Some titles might change soon in case you can't find them anymore (e.g., How to Reduce Animal Cruelty in Your Diet --> How to Have a More Ethical Diet Towards Animals, and How to Help Save a Child's Life with a Malaria Bed Net Donation --> How to Help Save a Child's Life from Malaria).

I'm interested in funding someone with a reasonable track record to work on this (if WikiHow permits funding). You can submit a very quick-and-dirty funding application here.

Have you had any bites on this project yet? I just had the misfortune of encountering the WikiHow entries for "How to Choose a Charity to Support" and "How to Donate to Charities Wisely", and "How to Improve the Lives of the Poor", which naturally have no mention of anything remotely EA-adjacent (like considering the impact/effectiveness of your donations or donating to health interventions in poor countries), instead featuring gems like:

* "Do an inventory of what's important to you.... Maybe you remember having the music program canceled at your school as a child."

* A three-way breakdown of ways to donate to charity including "donate money", "donate time", and then the incongruously specific/macabre "Donate blood or organs."

* I did appreciate the off-hand mention that "putting a student through grade school in the United States can cost upwards of $100,000. In some developing countries, you can save about 30 lives for the same amount," which hilariously is not followed up on whatsoever by the article.

* A truly obsessive focus on looking up charities' detailed tax records and contacting them, etc, to make sure they are not literal scams.

I'm not sure that creating new WikiHow entries about donations (or career choice) will be super high-impact. We'll be competing with the existing articles, which aren't going to go away just because we write our own. And doesn't everybody know that WikiHow is not a reliable source of good advice -- at least everybody from the smart, young, ambitious demographic that EA is most eager to target? Still, it would be easy to produce some WikiHow articles just by copy-pasting and lightly reformatting existing intro-to-EA content. I think I'm a little too busy to do this project myself right now, but if you haven't yet had any applications I could try to spread the word a bit.

No applications yet. In general, we rarely get the applications we ask/hope for; a reasonable default assumption is that nobody has been doing anything.

To me that sounds like a project that could be listed on https://www.eawork.club/ . I once listed a task there to translate the German Wikipedia article for Bovine Meat and Milk Factors into English, because I did not have the rights to do it myself. A day later somebody had done it. And in the meantime somebody apparently translated it into Chinese.

Running a literal school would be awesome, but seems too consuming of time and organisational resources to do right now. Assuming we did want to do that eventually, what could be a suitable smaller step? Founding an organisation with vetted staff, working full-time on promoting analytical and altruistic thinking to high-schoolers - professionalising in this way increases the safety and reputability of these programs. Its activities should be targeted to top schools, and could include, in increasing order of duration:

One-off outreach talks at top schools

Summer programs in more countries, and in more subjects, and with more of an altruistic bent (i.e. variations on SPARC and Eurosparc)

Recurring classes in things like philosophy, econ, and EA. Teaching by visitors could be arranged by liaising with school teachers, similarly to how external teachers are brought in for chess classes.

After-school, or weekend, programs for interested students

I'm not confident this would go well, given the various reports from Catherine's recap and Buck's further theorising. But targeting the right students, and bri... (read more)

After hearing about his defrauding FTX, like everyone else, I wondered why he did it. I haven't met Sam in over five years, but one thing that I can do is take a look at his old Felicifia comments. At that time, back in 2012, Sam identified as an act utilitarian, and said that he would follow rules (such as abstaining from theft) only if and when there was a real risk of getting caught. You can see this in the following pair of quotes.

I'm not sure I understand what the paradox is here. Fundamentally if you are going to donate the money to THL and he's going to buy lots of cigarettes with it it's clearly in an act utilitarian's interest to keep the money as long as this doesn't have consequences down the road, so you won't actually give it to him if he drives you. He might predict this and thus not give you the ride, but then your mistake was letting Paul know that you're an act utilitarian, not in being one. Perhaps this was because you've done this before, but then not giving him money the previous time was possibly not the correct decision according to act utilitarianism, because

Wow, I guess he didn't pay heed to his own advice here then!

I don't think this is that unlikely. He came across as a deluded megalomaniac in the chat with Kelsey (like even now he thinks there's a decent chance he can make things right!)

Longtermist EA seems relatively strong at thinking about how to do good, and raising funds for doing so, but relatively weak in affector organs, that tell us what's going on in the world, and effector organs that influence the world. Three examples of ways that EAs can actually influence behaviour are:

- working in & advising US nat sec

- working in UK & EU governments, in regulation

- working in & advising AI companies

But I expect this is not enough, and our (a/e)ffector organs are bottlenecking our impact. To be clear, it's not that these roles aren't mentally stimulating - they are. It's just that their impact lies primarily in implementing ideas, and uncovering practical considerations, rather than in an Ivory tower's pure, deep thinking.

The world is quickly becoming polarised between US and China, and this means that certain (a/e)ffector organs may be even more neglected than the others. We may want to promote: i) work as a diplomat ii) working at diplomat-adjacent think tanks, such as the Asia Society, iii) working at relevant UN bodies, relating to disarmament and bioweapon control, iv) working at UN... (read more)

This framing is not quite right, because it implies that there's a clean division of labour between thinkers and doers. A better claim would be: "we have a bunch of thinkers, now we need a bunch of thinker-doers".

7

RyanCarey

There's a new center in the Department of State, dedicated to the diplomacy surrounding new and emerging tech. This seems like a great place for Americans to go and work, if they're interested in arms control in relation to AI and emerging technology.

Confusingly, it's called the "Bureau of Cyberspace Security and Emerging Technologies (CSET)". So we now have to distinguish the State CSET from the Georgetown one - the "Centre for Security and Emerging Technology".

5

MichaelA🔸

Thanks for this.

I've also been thinking about similar things - e.g. about how there might be a lot of useful things EAs could do in diplomatic roles, and how an 80k career profile on diplomatic roles could be useful. This has partly been sparked by thinking about nuclear risk.

Hopefully in the coming months I'll write up some relevant thoughts of my own on this and talk to some people. And this shortform post has given me a little extra boost of inclination to do so.

A key priority for the Biden administration should be to rebuild the State Department's arms control workforce, as its current workforce is ageing and there have been struggles with recruiting and retaining younger talent

Another key priority should be "responding to the growing anti-satellite threat to U.S. and allies’ space systems". This should be tackled by, among other things:

"tak[ing] steps to revitalize America’s space security diplomacy"

"consider[ing] ways to expand space security consultations with allies and partners, and promote norms of behavior that can advance the security and sustainability of the outer space environment"

(Note: It's not totally clear to me whether this part of the article is solely about anti-satellite threats or about a broader range of space-related issues.)

This updated me a little bit further towards thinking it might be useful:

for more EAs to go into diplomacy and/or arms control

for EAs to do more to support other efforts to improve diplomacy and/or arms control (e.g., via directing funding to good existing work on these fronts)

Here's the part of the article which... (read more)

The typical view, here, on high-school outreach seems to be that:

High-school outreach has been somewhat effective, uncovering one highly capable do-gooder per 10-100 exceptional students.

But people aren't treating it with the requisite degree of sensitivity: they don't think enough about what parents think, they talk about "converting people", and there have been bad events of unprofessional behaviour.

So I think high-school outreach should be done, but done differently. Involving some teachers would be a useful step toward professionalisation (separating the outreach from the rationalist community would be another).

But (1) also suggests that teaching at a school for gifted children could be a priority activity in itself. The argument is that if a teacher can inspire a bright student to try to do good in their career, then the student might be manifold more effective than the teacher themselves would have been, if they had tried to work directly on the world's problems. And students at such schools are exceptional enough (Z>2) that this could happen many ... (read more)

A step that I think would be good to see even sooner is any professor at a top school getting in a habit of giving talks at gifted high-schools. At some point, it might be worth a few professors each giving dozens of talks per year, although it wouldn't have to start that way.

Edit: or maybe just people with "cool" jobs. Poker players? Athletes?

Has anyone else noticed that the EA Forum moderation is quite intense of late?

Back in 2014, I'd proposed quite limited criteria for moderation: "spam, abuse, guilt-trips, socially or ecologically destructive advocacy". I'd said then: "Largely, I expect to be able to stay out of users' way!" But my impression is that the moderators have at some point after 2017 taken to advising and sanctioning users based on their tone, for example, here (Halstead being "warned" for unsubstantiated true comments), with "rudeness" and "Other behavior that interferes with good discourse" being criteria for content deletion. Generally I get the impression that we need more, not fewer, people directly speaking harsh truths, and that it's rarely useful for a moderator to insert themselves into such conversation, given that we already have other remedies: judging a user's reputation, counterarguing, or voting up and down. Overall, I'd go as far as to conjecture that if moderators did 50% less (by continuing to delete spam, but standing down in the less clear-cut cases) the forum would be better off.

Do we have any statistics on the number of moderator actions per year?

Speaking as the lead moderator, I feel as though we really don’t make all that many visible “warning” comments (though of course, "all that many" is in the eye of the beholder).

I do think we’ve increased the number of public comments we make, but this is partly due to a move toward public rather than private comments in cases where we want to emphasize the existence of a given rule or norm. We send fewer private messages than we used to (comparing the last 12 months to the 18 months before that).

Since the new Forum was launched at the end of 2018, moderator actions (aside from deleting spam, approving posts, and other “infrastructure”) have included:

rafa_fanboy (three months, from February - May 2019, for a pattern of low-quality comments that often didn’t engage with the relevant post)

26 private messages sent to users to alert them that their activity was either violating or close to violating the Forum’s rules. To roughly group by category, there were:

7 messages about rude/insulting language

13 messages about posts or comments that either:

Had no apparent connection to effective altruism, or

I generally think more moderation is good, but have also pushed back on a number of specific moderation decisions. In general I think we need more moderation of the type "this user seems like they are reliably making low-quality contributions that don't meet our bar" and less moderation of the type "this was rude/impolite but its content was good", of which there have been a few recently.

Yeah, I'd revise my view to: moderation seems too stringent on the particular axis of politeness/rudeness. I don't really have any considered view on other axes.

9

Ramiro

You're a pretty good moderator.

Do you think some sort of periodic & public "moderation report" (like the summary above) would be convenient?

5

Aaron Gertler 🔸

Thanks!

I doubt that the stats I shared above are especially useful to share regularly (in a given quarter, we might send two or three messages). But it does seem convenient for people to be able to easily find public moderator comments.

In the course of writing the previous comment, I added the "moderator comment" designation to all the comments that applied. I'll talk to our tech team about whether there's a good way to show all the moderator comments on one page or something like that.

7

RyanCarey

Thanks, this detailed response reassures me that the moderation is not way too interventionist, and it also sounds positive to me that the moderation is becoming a bit more public, and less frequent.

6

IanDavidMoss

I actually think you are an unusually skilled moderator, FWIW.

Could it be useful for moderators to take into account the amount of karma / votes a statement receives?

I'm no expert here, and I just took a bunch of minutes to get an idea of the whole discussion - but I guess that's more than most people who will have contact with it. So it's not the best assessment of the situation, but maybe you should take it as evidence of what it'd look like for an outsider or the average reader.

In Halstead's case, the warning sounds even positive:

I think Aaron was painstakingly trying to follow moderation norms in this case; otherwise, moderators would risk having people accuse them of taking sides. I contrast it with Sean's comments, which were more targeted and catalysed Phil's replies, and ultimately led to the latter being banned; but Sean disclosed evidence for his statements, and consequently was not warned.

3

Aaron Gertler 🔸

(Sharing my personal views as a moderator, not speaking for the whole team.)

See my response to Larks on this:

Even if we make a point to acknowledge how useful a contribution might have been, or how much we respect the contributor, I don't want that to affect whether we interpret it as having violated the rules. We can moderate kindly, but we should still moderate.

People who do AI safety research sometimes worry that their research could also contribute to AI capabilities, thereby hastening a possible AI safety disaster. But when might this be a reasonable concern?

We can model a researcher $i$ as contributing intellectual resources of $s_i$ to safety and $c_i$ to capabilities, both real numbers. We let the total safety investment (of all researchers) be $s=\sum_i s_i$, and the capabilities investment be $c=\sum_i c_i$. Then, we assume that a good outcome is achieved if $s>c/k$, for some constant $k$, and a bad outcome otherwise.

The assumption about $s>c/k$ could be justified by safety and capabilities research having diminishing returns. Then you could have log-uniform beliefs (over some interval) about the level of capabilities $c'$ required to achieve AGI, and the amount of safety research $c'/k$ required for a good outcome. Within the support of $c'$ and $c'/k$, linearly increasing $s/c$ will linearly increase the chance of safe AGI.

In this model, having a positive marginal impact doesn't require us to completely abstain ...
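As a rough illustration of the model above, the following Python sketch checks the success condition (total safety greater than capabilities divided by k) and tests whether adding one researcher's contributions raises the overall safety-to-capabilities ratio. The function names and all example numbers are hypothetical, chosen only for illustration; only the quantities themselves (individual and total safety and capabilities contributions, and the constant k) come from the text.

```python
def good_outcome(s, c, k):
    """Success condition from the model: total safety s exceeds c / k."""
    return s > c / k


def raises_ratio(s_i, c_i, s_rest, c_rest):
    """True if adding researcher i increases the overall ratio s / c.

    Assumes c_rest > 0 and c_i >= 0 so that both ratios are defined.
    """
    before = s_rest / c_rest
    after = (s_rest + s_i) / (c_rest + c_i)
    return after > before


# Hypothetical numbers: the rest of the world has s/c = 100/50 = 2.
print(raises_ratio(3, 1, 100, 50))  # researcher's own ratio is 3, above 2
print(raises_ratio(1, 1, 100, 50))  # researcher's own ratio is 1, below 2
print(good_outcome(s=103, c=51, k=0.5))
```

In this sketch, a researcher with a positive capabilities contribution can still raise the overall ratio, provided their own safety-to-capabilities ratio is above that of everyone else combined.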

I propose an adjustment to this model: you have to be greater than the rest of the world's total contributions over time under the action-relevant probability measure. What I mean by action-relevant measure is the probability distribution where worlds are weighted according to your expected impact, not just their probability.

So if you think there's a decent chance that we're barely going to solve alignment, and that in those worlds the world will pivot towards a much higher safety focus, you should be more cautious about contributing to capabilities.

2

Ozzie Gooen

Interesting take, quick notes:

1) I worked on a similar model with Justin Shovelain a few years back. See: https://www.lesswrong.com/posts/BfKQGYJBwdHfik4Kd/fai-research-constraints-and-agi-side-effects

2) Rather, one's impact is positive if the ratio of safety and capabilities contributions $s_i/c_i$ is greater than the average of the rest of the world.

I haven't quite followed your model, but this doesn't seem exactly correct to me. For example, if the mean player is essentially "causing a lot of net-harm", then "just causing a bit of net-harm" clearly isn't a net-good.

1

Agrippa

It seems entirely possible that even with a 100 safety to 1 capabilities researcher ratio, 100 capabilities researchers could kill everyone before the 10k safety researchers came up with a plan that didn't kill everyone. It does not seem like a symmetric race.

Likewise, if the output of safety research is just "this is not safe to do" (as MIRI's seems to be), capabilities will continue, or in fact they will do MORE capabilities work so they can upskill and "help" with the safety problem.

Which longtermist hubs do we most need? (see also: Hacking Academia)

Suppose longtermism already has some presence in SF, Oxford, DC, London, Toronto, Melbourne, Boston, New York, and is already trying to boost its presence in the EU (especially Brussels, Paris, Berlin), UN (NYC, Geneva), and China (Beijing, ...). Which other cities are important?

I think there's a case for New Delhi, as the capital of India. It's the third-largest country by GDP (PPP), soon-to-be the most populous country, high-growth, and a neighbour of China. Perhaps we're neglecting it due to founder effects, because it has lower average wealth, because its universities aren't thriving, and/or because it currently has a nationalist government.

I also see a case for Singapore: its government and universities could be a place from which to work on de-escalating US-China tensions. It's physically and culturally not far from China. As a city-state, it benefits a lot from peace and global trade. It's by far the most-developed member of ASEAN, which is also large, mostly neutral, and benefits from peace. It's generally very technocratic with high historical growth, and is also the HQ of APEC.

Jakarta - yep, it's also ASEAN's HQ. Worth noting, though, that Indonesia is moving its capital out of Jakarta.

3

Max_Daniel

Yes, good point! My idle speculations have also made me wonder about Indonesia at least once.

4

Jonas_

PPP-adjusted GDP seems less geopolitically relevant than nominal GDP. Here's a nominal GDP table based on the same 2017 PwC report (source); the results are broadly similar:

9

Prabhat Soni

I'd be curious to discuss if there's a case for Moscow. 80,000 Hours lists being a Russia or India specialist under "Other paths we're excited about". The case would probably revolve around Russia's huge nuclear arsenal and efforts to build AI. If climate change were to become really bad (say 4 degrees+ warming), Russia (along with Canada and New Zealand) would become the new hub for immigration given its geography -- and this alone could make it one of the most influential countries in the world.

We tend to think that AI x-risk is mostly from accidents because, well, few people are omnicidal, and alignment is hard, so an accident is more likely. We tend to think that in bio, on the other hand, it would be very hard for a natural or accidental event to cause the extinction of all humanity. But the arguments we use for AI ought to also imply that the risks from intentional use of biotech are quite slim.

We can state this argument more formally using three premises:

1. The risk of accidental bio-x-catastrophe is much lower than that of non-accidental bio-x-catastrophe.

2. A non-accidental AI x-catastrophe is at least as likely as a non-accidental bio x-catastrophe.

3. >90% of AI x-risk comes from an accident.

It follows from (1-3) that x-risk from AI is >10x larger than that of biotech. We ought to believe that (1) and (3) are true for reasons given in the first paragraph. (2) is, in my opinion, a topic too fraught with infohazards to be fit for public debate. That said, it seems plausible due to AI being generally more powerful than biotech. So I lean toward thinking the conclusion is correct.
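One way to check the arithmetic behind the >10x claim is a small Python sketch. It treats the accident share of AI x-risk (premise 3) and the ratio of accidental to non-accidental bio x-risk (premise 1) as free parameters, and uses premise 2 in its weakest form (non-accidental AI risk equal to non-accidental bio risk). The function name and the example values are hypothetical.

```python
def ai_to_bio_lower_bound(ai_accident_share, bio_accident_ratio):
    """Lower bound on (total AI x-risk) / (total bio x-risk).

    ai_accident_share: fraction of AI x-risk that is accidental (premise 3),
        must be strictly below 1.
    bio_accident_ratio: accidental bio risk divided by non-accidental bio
        risk (premise 1 says this is small).
    Premise 2: non-accidental AI risk >= non-accidental bio risk.
    """
    # total AI  = non-accidental AI / (1 - ai_accident_share)
    # total bio = non-accidental bio * (1 + bio_accident_ratio)
    return 1.0 / ((1.0 - ai_accident_share) * (1.0 + bio_accident_ratio))


# Hypothetical inputs for illustration.
print(ai_to_bio_lower_bound(0.90, 0.0))   # accidental bio risk negligible
print(ai_to_bio_lower_bound(0.90, 0.1))   # accidental bio risk is 10% of non-accidental
print(ai_to_bio_lower_bound(0.95, 0.1))
```

Under these assumptions, the bound sits at about 10 when exactly 90% of AI risk is accidental and accidental bio risk is negligible, and dips slightly below 10 if accidental bio risk is not negligible. So the conclusion leans on reading (1) as "negligible" and (3) as strictly above 90%.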

In The Precipice, the risk from AI was rated as merely 3x greater. But if the difference is >10x, then almost all longtermists who are not much more competent in bio than in AI should prefer to work on AIS.

I like this approach, even though I'm unsure of what to conclude from it. In particular, I like the introduction of the accident vs non-accident distinction. It's hard to get an intuition of what the relative chances of a bio-x-catastrophe and an AI-x-catastrophe are. It's easier to have intuitions about the relative chances of:

Accidental vs non-accidental bio-x-catastrophes

Non-accidental AI-x-catastrophes vs non-accidental bio-x-catastrophes

Accidental vs non-accidental AI-x-catastrophes

That's what you're making use of in this post. Regardless of what one thinks of the conclusion, the methodology is interesting.

Note that premise 2 strongly depends on the probability of crazy AI being developed in the relevant time period.

3

Stefan_Schubert

Yeah, I think it would be good to introduce premisses relating to the time that AI and bio capabilities that could cause an x-catastrophe ("crazy AI" and "crazy bio") will be developed. To elaborate on a (protected) tweet of Daniel's.

Suppose that your timelines for crazy AI are as long as those for crazy bio, but that you are uncertain about them, and that they're uncorrelated, in your view.

Suppose also that we modify 2 into "a non-accidental AI x-catastrophe is at least as likely as a non-accidental bio x-catastrophe, conditional on there existing both crazy AI and crazy bio, and conditional on there being no other x-catastrophe". (I think that captures the spirit of Ryan's version of 2.)

Suppose also that you think that, in the world where crazy AI gets developed first, there is a 90% chance of an accidental AI x-catastrophe, and that in 50% of the worlds where there isn't an accidental x-catastrophe, there is a non-accidental AI x-catastrophe - meaning the overall risk is 95% (in line with 3). In the world where crazy bio is instead developed first, there is a 50% chance of a non-accidental x-catastrophe (by the modified version of 2), plus some chance of an accidental x-catastrophe, meaning the overall risk is a bit more than 50%.

Regarding the timelines of the technologies, one way of thinking would be to say that there is a 50/50 chance that we get AI or bio first, meaning there is a 47.5% chance of an AI x-catastrophe and a >25% chance of a bio x-catastrophe (plus additional small probabilities of the slower crazy technology killing us in the worlds where we survive the first one; but let's ignore that for now). That would mean that the ratio of AI x-risk to bio x-risk is more like 2:1. However, one might also think that there is a significant number of worlds where both technologies are developed at the same time, in the relevant sense - and your original argument potentially could be used as it is regarding those worlds. If so, that would i
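The scenario arithmetic in this comment can be reproduced with a short Python sketch. The function and parameter names are hypothetical; the default values are the figures stated in the comment (a 50/50 chance of which technology comes first, a 90% accident chance in the AI-first world, 50% for the non-accidental AI case, and roughly 50% overall risk in the bio-first world). Like the comment, it ignores the slower technology in worlds that survive the first one.

```python
def scenario_risks(p_ai_first=0.5,
                   p_ai_accident=0.9,
                   p_ai_nonaccident_given_no_accident=0.5,
                   p_bio_catastrophe=0.5):
    """Return (risk in AI-first world, overall AI risk, overall bio risk)."""
    # AI-first world: an accident, or else a non-accidental catastrophe.
    risk_ai_world = (p_ai_accident
                     + (1 - p_ai_accident) * p_ai_nonaccident_given_no_accident)
    ai_risk = p_ai_first * risk_ai_world
    # Bio-first world: treated as a lower bound ("a bit more than 50%").
    bio_risk = (1 - p_ai_first) * p_bio_catastrophe
    return risk_ai_world, ai_risk, bio_risk


risk_ai_world, ai_risk, bio_risk = scenario_risks()
print(round(risk_ai_world, 3), round(ai_risk, 3), round(bio_risk, 3))
print(round(ai_risk / bio_risk, 2))  # ratio of AI to bio x-risk
```

With these inputs the AI term is 0.5 × 0.95 = 0.475 and the bio term is at least 0.25, giving a ratio a little under 2:1.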

Certain opportunities are much more attractive to the impact-minded than to regular academics, and so may be attractive, relative to how competitive they are.

The secure nature of EA funding means that tenure is less important (although of course it's still good).

Some centers do research on EA-related topics, and are therefore more attractive, such as Oxford, GMU.

Universities in or near capital cities (such as Georgetown, UMD College Park, ANU, Ghent, Tsinghua) or near other political centers (such as NYC, Geneva) may offer a perch from which to provide policy input.

Those doing interdisciplinary work may want to apply for a department that's strong in a field other than their own. For example, people working in AI ethics may benefit from centers that are great at AI, even if they're weak in philosophy.

Certain universities may be more attractive due to being in an EA hub, such as Berkeley, Oxford, UCL, UMD College Park, etc.

Thinking about an academic career in this way makes me think more people should pursue tenure at UMD, Georgetown, and Johns Hopkins (good for both biosecurity and causal models of AI), than I thought beforehand.

An organiser from Stanford EA asked me today how community building grants could be made more attractive. I have two reactions:

Specialised career pathways. To the extent that this can be done without compromising effectiveness, community-builders should be allowed to build field-specialisations, rather than just geographic ones. Currently, community-builders might hope to work at general outreach orgs like CEA and 80k. But general orgs will only offer so many jobs. Casting the net a bit wider, many activities of Forethought Foundation, SERI, LPP, and FLI are field-specific outreach. If community-builders take on some semi-specialised kinds of work in AI, or policy, or econ, (in connection with these orgs or independently) then this would aid their prospects of working for such orgs or returning to a more mainstream pathway.

"Owning it". To the extent that community building does not offer a specialised career pathway, the fact that it's a bold move should be incorporated into the branding. The Thiel Fellowship offers $100k to ~2 dozen students per year, to drop out of their programs to work on a startup that might change the world. Not

How the Haste Consideration turned out to be wrong.

In The haste consideration, Matt Wage essentially argued that given exponential movement growth, recruiting someone is very important, and that in particular, it’s important to do it sooner rather than later. After the passage of nine years, no one in the EA movement seems to believe it anymore, but it feels useful to recap what I view as the three main reasons why:

Exponential-looking movement growth will (almost certainly) level off eventually, once the ideas reach the susceptible population. So earlier outreach really only causes the movement to reach its full size at an earlier point. This has been learned from experience, as movement growth was north of 50% around 2010, but has since tapered to around 10% per year as of 2018-2020. And I’ve seen similar patterns in the AI safety field.

When you recruit someone, they may do what you want initially. But over time, your ideas about how to act may change, and they may not update with you. This has been seen in practice in the EA movement, which was highly intellectual and designed around values, rather than particular actions. People were reminded that their role is to help answer a
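The first reason above (growth levelling off once the susceptible population is reached) can be illustrated with a minimal logistic-growth sketch in Python. This is only a toy model, not something from the original post: the growth rate, capacity, and starting sizes are hypothetical, and the function names are made up for this example.

```python
def simulate_logistic(initial_size, growth_rate=0.5, capacity=10_000, steps=60):
    """Discrete logistic growth: growth slows as the receptive pool is used up."""
    sizes = [float(initial_size)]
    for _ in range(steps):
        n = sizes[-1]
        sizes.append(n + growth_rate * n * (1 - n / capacity))
    return sizes


def first_step_reaching(sizes, threshold):
    """Index of the first step at which the size is at least `threshold`."""
    return next(t for t, n in enumerate(sizes) if n >= threshold)


baseline = simulate_logistic(initial_size=100)
head_start = simulate_logistic(initial_size=400)  # extra early recruitment

# The head start reaches half of capacity sooner...
print(first_step_reaching(baseline, 5_000), first_step_reaching(head_start, 5_000))
# ...but both runs end up at essentially the same final size.
print(round(baseline[-1]), round(head_start[-1]))
```

In this toy model, extra early recruitment shifts the growth curve earlier in time without changing where it ends up, which is the sense in which earlier outreach "only causes the movement to reach its full size at an earlier point".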

I have a few thoughts here, but my most important one is that your (2), as phrased, is an argument in favour of outreach, not against it. If you update towards a much better way of doing good, and any significant fraction of the people you 'recruit' update with you, you presumably did much more good via recruitment than via direct work.

Put another way, recruitment defers the question of how to do good into the future, and is therefore particularly valuable if we think our ideas are going to change/improve particularly fast. By contrast, recruitment (or deferring to the future in general) is less valuable when you 'have it all figured out'; you might just want to 'get on with it' at that point.

***

It might be easier to see with an illustrated example: