Holding powerful people accountable.

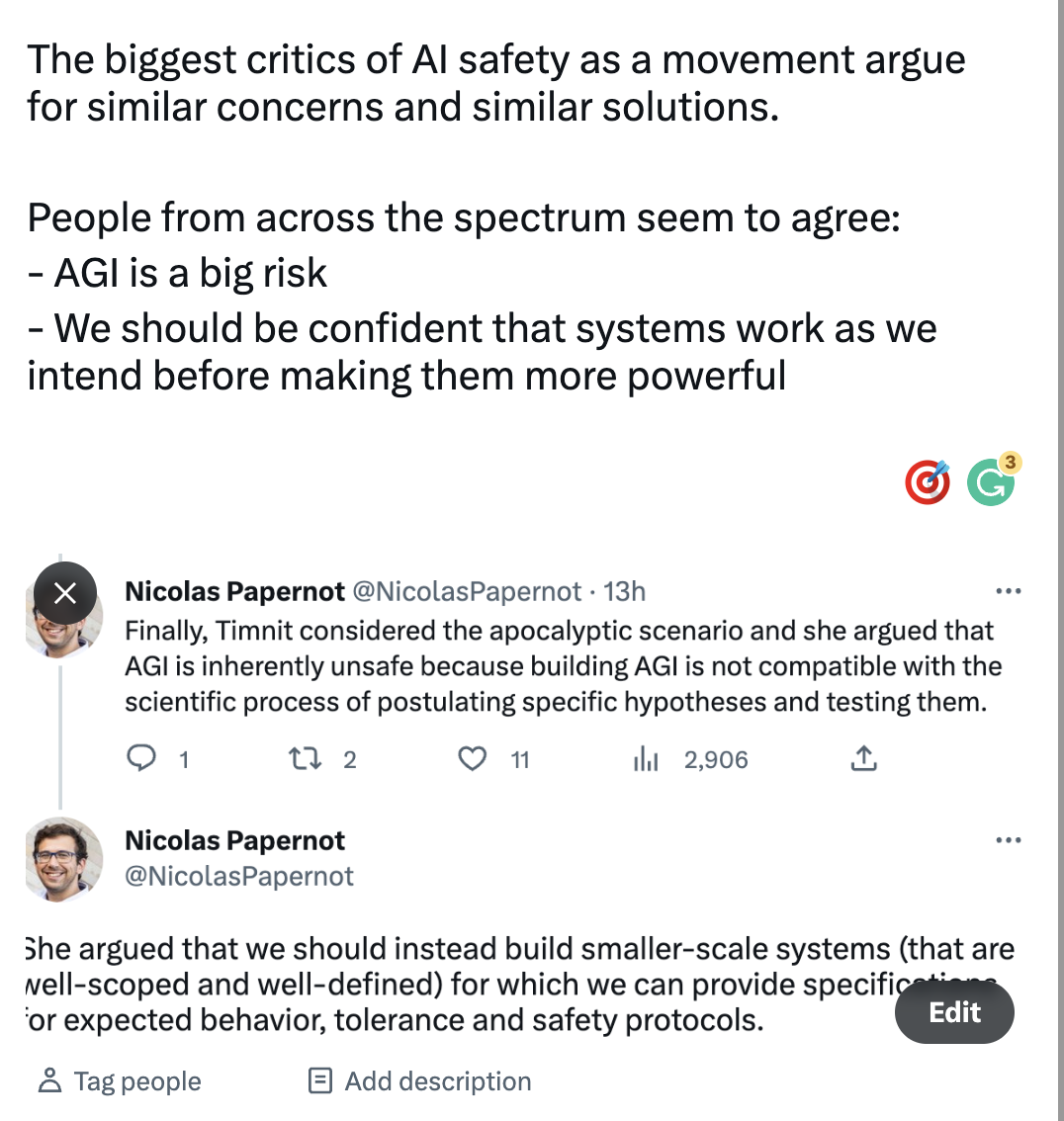

Reposted from a Twitter thread.

I have made a number of prediction markets holding powerful people accountable[1]. Powerful people (and their friends) really can exert a lot of pressure with an angry email or DM (n = 2-5). If you are powerful, please consider how big your muscles are before you give pushback.

I have quite thick skin, but I don't know whether such people are going around DMing everyone like this. Likewise, this is a flaw I sometimes have, and I have learned to be very light touch on pushback to non-frie...

I note that in some sense I have lost trust that the EA community gives me a clear prioritisation of where to donate.

Some clearer statements:

- I still think GiveWell does great work

- I still generally respect the funding decisions of Open Philanthropy

- I still think this forum has a higher standard than most places

- It is hard to know exactly how high impact animal welfare funding opportunities interact with x-risk ones

- I don't know what the general consensus on the most impactful x-risk funding opportunities is

- I don't really know what orgs do all-considered work on this topic. I guess the LTFF?

- I am more confused/inattentive and this community is covering a larger set of possible choices so it's harder to track what consensus is

Since it looks like you're looking for an opinion, here's mine:

To start, while I deeply respect GiveWell's work, in my personal opinion I still find it hard to believe that any GiveWell top charity is worth donating to if you're planning to do the typical EA project of maximizing the value of your donations in a scope sensitive and impartial way. ...Additionally, I don't think other x-risks matter nearly as much as AI risk work (though admittedly a lot of biorisk stuff is now focused on AI-bio intersections).

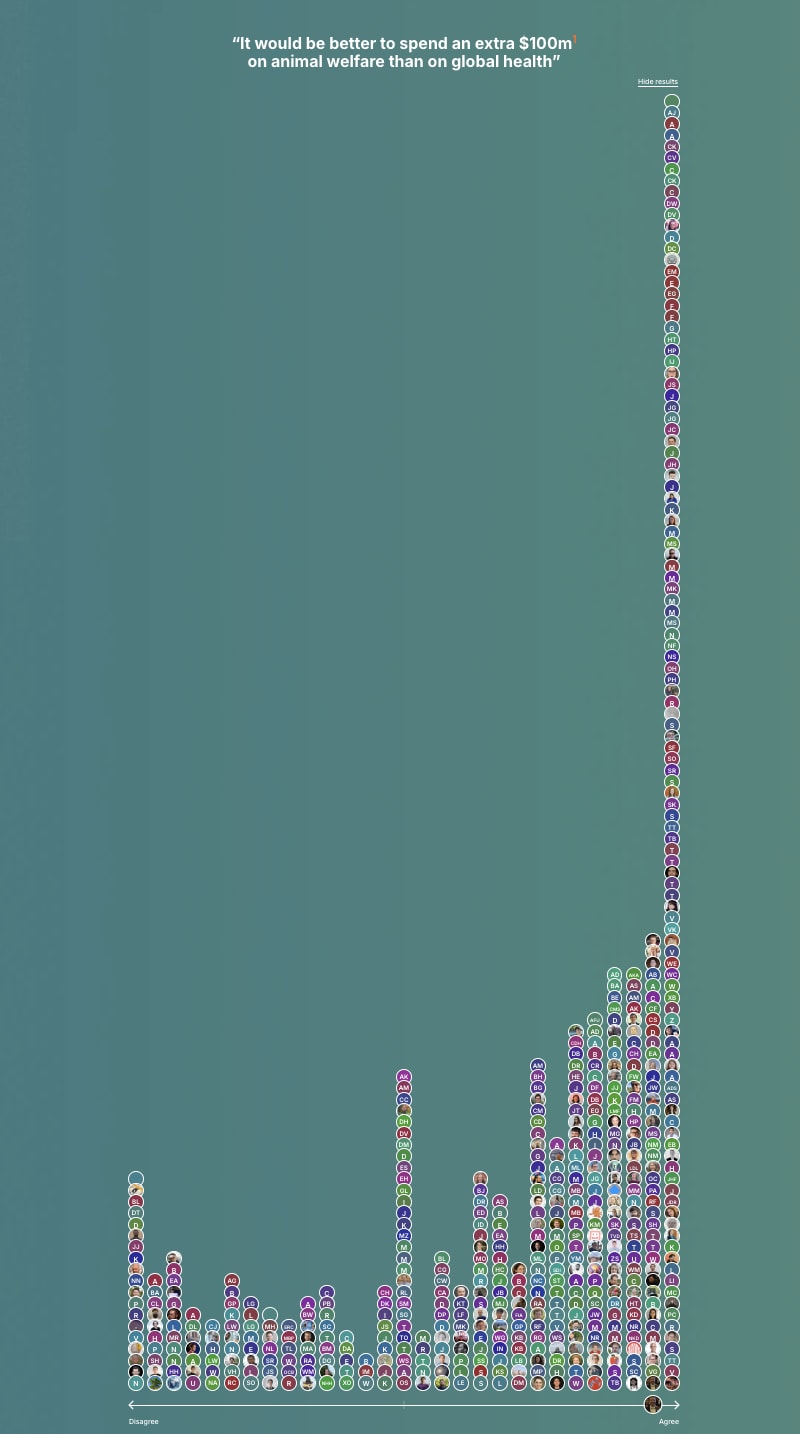

Instead, I think the main difficult judgement call in EA cause prioritization right now is "neglected animals" (eg invertebrates, wild animals) versus AI risk reduction.

AFAICT this also seems to be somewhat close to the overall view of the EA Forum, as you can see in some of the debate weeks (animals smashed humans) and the Donation Election (where neglected animal orgs were all at the top, followed by PauseAI).

This comparison is made especially difficult because OP funds a lot of AI work but none of the neglected animal stuff, which subjects the AI work to significantly more diminishing marginal returns.

To be clear, AI orgs still do need money. I think there's a vibe that ...

I think it's normal, and even good, that the EA community doesn't have a clear prioritization of where to donate. People have different values and different beliefs, and so prioritize donations to different projects.

It is hard to know exactly how high impact animal welfare funding opportunities interact with x-risk ones

What do you mean? I don't understand how animal welfare campaigns interact with x-risks, except for reducing the risk of future pandemics, but I don't think that's what you had in mind (and even then, I don't think those are the kinds of pandemics that x-risk-minded people worry about).

I don't know what the general consensus on the most impactful x-risk funding opportunities is

It seems clear to me that there is no general consensus, and some of the most vocal groups are actively fighting against each other.

I don't really know what orgs do all-considered work on this topic. I guess the LTFF?

You can see Giving What We Can recommendations for global catastrophic risk reduction on this page[1] (e.g. there's also Longview's Emerging Challenges Fund). Many other orgs and foundations work on x-risk reduction, e.g. Open Philanthropy.

...

I am more confused/inattentive and th

I feel like I want 80k to do more cause prioritisation if they are gonna direct so many people. Seems like 5 years ago they had their whole ranking thing which was easy to check. Now I am less confident in the quality of work that is directing lots of people in a certain direction.

Idk, many of the people they are directing would just do something kinda random which an 80k rec easily beats. I'd guess the number of people for whom 80k makes their plans worse in an absolute sense is kind of low and those people are likely to course correct.

OTOH, I do think people/orgs in general should consider doing more strategy/cause prio research, and if 80k were like "we want to triple the size of our research team to work out the ideal marginal talent allocation across longtermist interventions" that seems extremely exciting to me. But I don't think 80k are currently being irresponsible (not that you explicitly said that; for some reason I got a bit of that vibe from your post).

Compare Giving What We Can's page, which has good branding and simple language. IMO the 80,000 Hours page has too much text and too much going on on the front page. Bring both websites up on your phone and judge for yourself.

My understanding is that 80k have done a bunch of A/B testing which suggested their current design outcompetes ~most others (presumably in terms of click-throughs / amount of time users spend on key pages).

You might not like it, but this is what peak performance looks like.

Have your EA conflicts on... THE FORUM!

In general, I think it's much better to first attempt to have a community conflict internally before I have it externally. This doesn't really apply to criminal behaviour or sexual abuse. I am centrally talking about disagreements, eg the Bostrom stuff, fallout around the FTX stuff, Nonlinear stuff, now this Manifest stuff.

Why do I think this?

- If I want to credibly signal I will listen and obey norms, it seems better to start with a small discourse escalation rather than a large one. Starting a community discussion on twitter is like jumping straight to a shooting war.

- Many external locations (eg Twitter, the press) have very skewed norms/incentives compared to the forum, and so many parties can feel like they are the victim. I find that when multiple parties feel they are weaker and victimised, escalation is likely.

- Many spaces have less affordance for editing comments, seeing who agrees with who, having a respected mutual party say "woah hold up there"

- It is hard to say "I will abide by the community sentiment" if I have already started the discussion elsewhere in order to shame people. And if I don't intend to abide by the commu

This is also an argument for the forum's existence generally, if many of the arguments would otherwise be had on Twitter.

I want to once again congratulate the forum team on this voting tool. I think by doing this, the EA forum is at the forefront of internal community discussions. No communities do this well and it's surprising how powerful it is.

If anyone who considers themselves inside the EA movement disagrees with me on the Manifest stuff, I'd like to have some discussions with a focus on consensus-building, i.e. we chat in DMs and then both report some statements we agreed on and some we specifically disagreed on.

Edited:

@Joseph Lemien asked for positions I hold:

- The EA forum should not seek to have opinions on non-EA events. I don't mean individual EAs shouldn't have opinions; I mean that as a group we shouldn't seek to judge individual events. I don't think we're very good at it.

- I don't like Hanania's behaviour either and am a little wary of systems where norm-breaking behaviour gives extra power, such as being endlessly edgy. But I will take those complaints to the Manifold community internally.

- EAGs are welcome to invite or disinvite whoever CEA likes. Maybe one day I'll complain. But do I want EAGs to invite a load of Manifest's edgiest speakers? Not particularly.

- It is fine for there to be spaces with discussion that I find ugly. If people want to go to these events, that's up to them.

- I dislike having unresolved conflicts which ossify into an inability to talk about things. Someone once told me tha

Where can I see the debate week diagram if I want to look back at it?

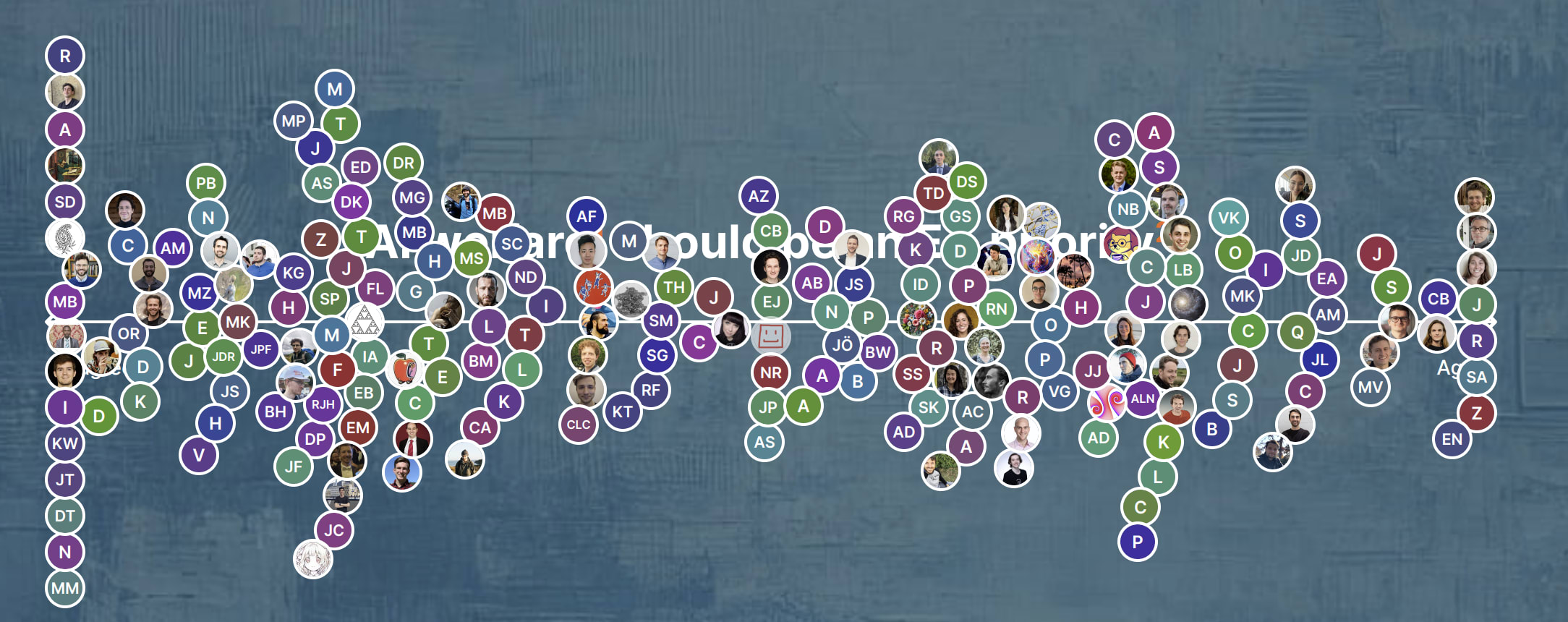

Here's a screenshot (open in new tab to see it in slightly higher resolution). I've also made a spreadsheet with the individual voting results, which gives all the info that was on the banner just in a slightly more annoying format.

We are also planning to add a native way to look back at past events as they appeared on the site :), although this isn't a super high priority atm.

Suggestion.

Debate weeks every other week and we vote on what the topic is.

I think if the forum had a defined topic (especially one set in advance), I would be more motivated to read a number of posts on that topic.

One of the benefits of the culture war posts is that we are all thinking about the same thing. If we did that on chosen topics, perhaps with dialogues from experts, we would get that shared focus on something useful.

An alternate stance on moderation (from @Habryka).

This is from this comment responding to this post about there being too many bans on LessWrong. Note how LessWrong is less moderated than here in that it (I guess) responds to individual posts less often, but more moderated in that (I guess) it rate-limits people more without reason.

I found it thought provoking. I'd recommend reading it.

Thanks for making this post!

One of the reasons why I like rate-limits instead of bans is that it allows people to complain about the rate-limiting and to participate in discussion on their own posts (so seeing a harsh rate-limit of something like "1 comment per 3 days" is not equivalent to a general ban from LessWrong, but should be more interpreted as "please comment primarily on your own posts", though of course it shares many important properties of a ban).

This is a pretty opposite approach to the EA forum which favours bans.

...

Things that seem most important to bring up in terms of moderation philosophy:

Moderation on LessWrong does not depend on effort

"Another thing I've noticed is that almost all the users are trying. They are trying to use rationality, trying to understan

This is a pretty opposite approach to the EA forum which favours bans.

If you remove ones for site-integrity reasons (spamming DMs, ban evasion, vote manipulation), bans are fairly uncommon. In contrast, it sounds like LW does do some bans of early-stage users (cf. the disclaimer on this list), which could be cutting off users with a high risk of problematic behavior before it fully blossoms. Reading further, it seems like the stuff that triggers a rate limit at LW usually triggers no action, private counseling, or downvoting here.

As for more general moderation philosophy, I think the EA Forum has an unusual relationship to the broader EA community that makes the moderation approach outlined above a significantly worse fit for the Forum than for LW. As a practical matter, the Forum is the ~semi-official forum for the effective altruism movement. Organizations post official announcements here as a primary means of publishing them, but rarely on (say) the effectivealtruism subreddit. Posting certain content here is seen as a way of whistleblowing to the broader community as a whole. Major decisionmakers are known to read and even participate in the Forum.

In contrast (although I am not...

Lab grown meat -> no-kill meat

This tweet recommends changing the words we use to discuss lab-grown meat. Seems right.

There has been a lot of discussion of this, some studies were done on different names, and GFI among others seem to have landed on "cultivated meat".

The front page agree disagree thing is soo coool. Great work forum team.

Looks great!

I tried to make it into a beeswarm, and while IMHO it does look nice it also needs a bunch more vertical space (and/or smaller circles)

I am not confident that another FTX-level crisis is less likely to happen, other than that we might all say "oh this feels a bit like FTX".

Changes:

- Board swaps. Yeah maybe good, though many of the people who left were very experienced. And it's not clear whether there are due diligence people (which seems to be what was missing).

- Orgs being spun out of EV and EV being shuttered. I mean, maybe good, though it feels like it's swung too far. Many mature orgs should run on their own, but small orgs do have many replicable features.

- More talking about honesty. Not really sure this was the problem. The issue wasn't the median EA; it was in the tails. Are the tails of EA more honest? Hard to say.

- We have now had a big crisis so it's less costly to say "this might be like that big crisis". Though notably this might also be too cheap - we could flinch away from doing ambitious things

- Large orgs seem slightly more beholden to comms/legal to avoid saying or doing the wrong thing.

- OpenPhil is hiring more internally

Non-changes:

- Still very centralised. I'm pretty pro-elite, so I'm not sure this is a problem in and of itself, though I have come to think that elites in general are less competent than I thought before (see FTX and OpenAI crisis)

- Little discussion of why or how the affiliation with SBF happened despite many well connected EAs having a low opinion of him

- Little discussion of what led us to ignore the base rate of scamminess in crypto and how we'll avoid that in future

Some things I don't think I've seen around FTX, which are probably due to the investigation but still seem worth noting. Please correct me if these things have been said.

- I haven't seen anyone at the FTXFF acknowledge fault for negligence in not noticing that a defunct phone company (North Dimension) was paying out their grants.

- This isn't hugely judgemental from me, I think I'd have made this mistake too, but I would like it acknowledged at some point

- Since writing this it's been pointed out that there were grants paid from FTX and Alameda accounts also. Ooof.

The FTX Foundation grants were funded via transfers from a variety of bank accounts, including North Dimension-8738 and Alameda-4456 (Primary Deposit Accounts), as well as Alameda-4464 and FTX Trading-9018.

- I haven't seen anyone at CEA acknowledge that they ran an investigation in 2019-2020 on someone who would turn out to be one of the largest fraudsters in the world and failed to turn up anything despite seemingly a number of flags.

- I remain confused

- As I've written elsewhere I haven't seen engagement on this point, which I find relatively credible, from one of the Time articles:

"Bouscal recalled speaking to Mac Aulay...

Certainly very concerning. Two possible mitigations though:

- Any finding of negligence would only apply to those with duties or oversight responsibilities relating to operations. It's not every employee or volunteer's responsibility to be a compliance detective for the entire organization.

- It's plausible that people made some due diligence efforts that were unsuccessful because they were fed false information and/or relied on corrupt experts (like "Attorney-1" in the second interim trustee report). E.g., if they were told by Legal that this had been signed off on and that it was necessary for tax reasons, it's hard to criticize a non-lawyer too much for accepting that. Or more simply, they could have been told that all grants were made out of various internal accounts containing only corporate monies (again, with some tax-related justification that donating non-US profits through a US charity would be disadvantageous).

People voting without explaining is good.

I often see people thinking that this is brigading or something when actually most people just don't want to write a response; they either like or dislike something.

If it were up to me I might suggest an anonymous "I don't know" button and an anonymous "this is poorly framed" button.

When I used to run a lot of Facebook polls, it was overwhelmingly men who wrote answers, but if there were options to vote, the gender split was much more even. My hypothesis was that a certain argumentative kind of person, usually a man, tended to enjoy writing long responses more. And so blocking lower-effort/less antagonistic/more anonymous responses meant I heard more from this kind of person.

I don't know if that is true on the forum, but I would guess that the higher effort it is to respond the more selective the responses become in some direction. I guess I'd ask if you think that the people spending the most effort are likely to be the most informed. In my experience, they aren't.

More broadly I think it would be good if the forum optionally took some information about users - location, income, gender, cause area, etc and on answers with more than say 10 votes would dis...

It seems like we could use the new reactions for some of this. At the moment they're all positive but there could be some negative ones. And we'd want to be able to put the reactions on top level posts (which seems good anyway).

I know of at least one NDA at an EA org silencing someone from discussing bad behaviour that happened at that org. Should EA orgs be in the practice of making people sign such NDAs?

I suggest no.

Feels like we've had about 3 months since the FTX collapse with no kind of leadership comment. Uh, that feels bad. I mean, I'm all for "give cold takes", but how long are we talking?

The OpenAI stuff has hit me pretty hard. If that's you also, look after yourself.

I don't really know what accurate thought looks like here.

I am really not the person to do it, but I still think there needs to be some community therapy here. Like a truth and reconciliation committee. Working together requires trust and I'm not sure we have it.

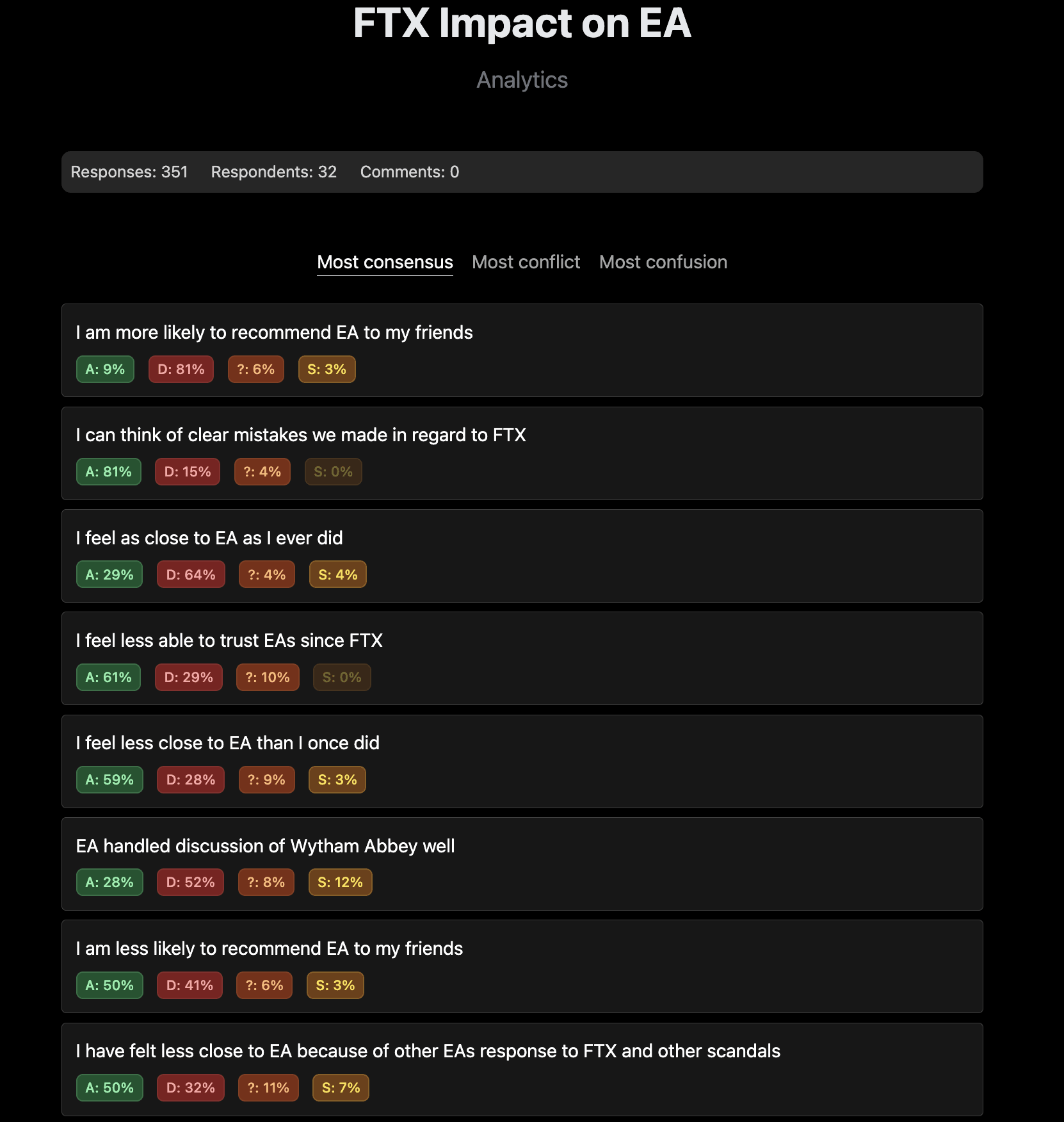

Poll: https://viewpoints.xyz/polls/ftx-impact-on-ea

Results: https://viewpoints.xyz/polls/ftx-impact-on-ea/results

I think the strategy fortnight worked really well. I suggest that another one is put in the calendar (for, say, 3 months' time) and then, rather than drip-feeding comments, we sort of wait and then burst it out again.

It felt better to me, anyway, to be like "for these two weeks I will engage".

I want to say thanks to people involved in the EA endeavour. I know things can be tough at times. You didn't have to care about this stuff, but you do. Thank you, it means a lot to me. Let's make the world better!

I notice some people (including myself) reevaluating their relationship with EA.

This seems healthy.

When I was a Christian it was extremely costly for me to reduce my identification and resulted in a delayed and much more final break than perhaps I would have wished[1]. My general view is that people should update quickly, and so if I feel like moving away from EA, I do it when I feel that, rather than inevitably delaying and feeling ick.

Notably, reducing one's identification with the EA community need not change one's poise towards effective work/donations/earn to give. I doubt it will change mine. I just feel a little less close to the EA community than once I did, and that's okay.

I don't think I can give others good advice here, because we are all so different. But the advice I would want to hear is "be part of things you enjoy being part of, choose an amount of effort to give to effectiveness and try to be a bit more effective with that each month, treat yourself kindly because you too are a person worthy of love"

[1] I think a slow move away from Christianity would have been healthier for me. Strangely I find it possible to imagine still being a Christian, had thi

I intend to strong downvote any article about EA that someone posts on here that they themselves have no positive takes on.

If I post an article, I have some reason I liked it, even a single line. Being critical isn't enough on its own. If someone posts an article without a single quote they like, with the implication it's a bad article, I am minded to strong downvote so that no one else has to waste their time on it.

The Scout Mindset deserved 1/10th of the marketing campaign of WWOTF. Galef is a great figurehead for rational thinking and it would have been worth it to try and make her a public figure.

How are we going to deal emotionally with the first big newspaper attack against EA?

EA is pretty powerful in terms of impact and funding.

It seems only a matter of time before there is a really nasty article written about the community or a key figure.

Last year the NYT wrote a hit piece on Scott Alexander and while it was cool that he defended himself, I think he and the rationalist community overreacted and looked bad.

I would like us to avoid this.

If someone writes a hit piece about the community, GiveWell, Will MacAskill etc., how are we going to avoid a kneejerk reaction that makes everything worse?

I suggest if and when this happens:

- individuals largely don't respond publicly unless they are very confident they can do so in a way that leads to de-escalation.

- articles exist to get clicks. It's worth someone (not necessarily me or you) responding to an article in the NYT, but if, say, a niche commentator goes after someone, fewer people will hear it if we let it go.

- let the comms professionals deal with it. All EA orgs and big players have comms professionals. They can defend themselves.

- if we must respond (we often needn't) we should adopt a stance of grace, curiosity and hu

Yeah, I think the community response to the NYT piece was counterproductive, and I've also been dismayed at how much people in the community feel the need to respond to smaller hit pieces, effectively signal boosting them, instead of just ignoring them. I generally think people shouldn't engage with public attacks unless they have training in comms (and even then, sometimes the best response is just ignoring).

Richard Ngo just gave a talk at EAG Berlin about errors in AI governance, one being a lack of concrete policy suggestions.

Matt Yglesias said this a year ago. He was even the main speaker at EAG DC https://www.slowboring.com/p/at-last-an-ai-existential-risk-policy?utm_source=%2Fsearch%2Fai&utm_medium=reader2

Seems worth asking why we didn't listen to top policy writers when they warned that we didn't have good proposals.

I think 90% of the answer to this is risk aversion from funders, especially LTFF and OpenPhil, see here. As such many things struggled for funding, see here.

We should acknowledge that doing good policy research often involves actually talking to and networking with policy people. It involves running think tanks and publishing policy reports, not just running academic institutions and publishing papers. You cannot do this kind of research well in a vacuum.

That fact, combined with funders who were (and maybe still are) somewhat against funding people (except for people they knew extremely well) to network with policy makers in any way, has led to (and maybe is still leading to) very limited policy research and development.

I am sure others could justify this risk-averse approach, and there are totally benefits to being risk averse. However, in my view this was a mistake (and is maybe an ongoing mistake). I think this was driven by the fact that funders were/are: A] not policy people, so do/did not understand the space and so were/are hesitant to make grants; B] heavily US-centric, so do/did not understand the non-US policy space; and C] heavily capacity constrained, so do/did ...

Joe Rogan (the largest podcaster in the world) repeatedly giving concerned but mediocre x-risk explanations suggests that people who have contacts with him should try to get someone on the show to talk about it.

E.g. listen from 2:40:00, though there were several bits like this during the show.

I hope Will MacAskill is doing well. I find it hard to predict how he's doing as a person. While there have been lots of criticisms (and I've made some), I think it's tremendously hard to be the Schelling person for a movement. There is a separate axis, however, and I hope in himself he's doing well, and I imagine many feel that way. I hope he has an accurate picture here.

The vibe at EAG was chill, maybe a little downbeat, but fine. I can get myself riled up over the forum, but it's not representative! Most EAs are just getting on with stuff.

(This isn't to say that forum stuff isn't important; it's just as important as it is, rather than being what should define my mood)

I've said that people voting anonymously is good, and I still think so, but when I have people downvoting me for appreciating little jokes that other people post on my shortform, I think we've become grumpy.

Sam Harris takes Giving What We Can pledge for himself and for his meditation company "Waking Up"

Harris references MacAskill and Ord as having been central to his thinking and talks about Effective Altruism and existential risk. He publicly pledges 10% of his own income and 10% of the profit from Waking Up. He also will create a series of lessons on his meditation and education app around altruism and effectiveness.

Harris has 1.4M Twitter followers and is a famed Humanist and New Atheist. The Waking Up app has over 500k downloads on Android, so I guess over 1 million overall.

https://dynamic.wakingup.com/course/D8D148

I like letting personal thoughts be up or downvoted, so I've put them in the comments.

Harris is a marmite figure - in my experience people love him or hate him.

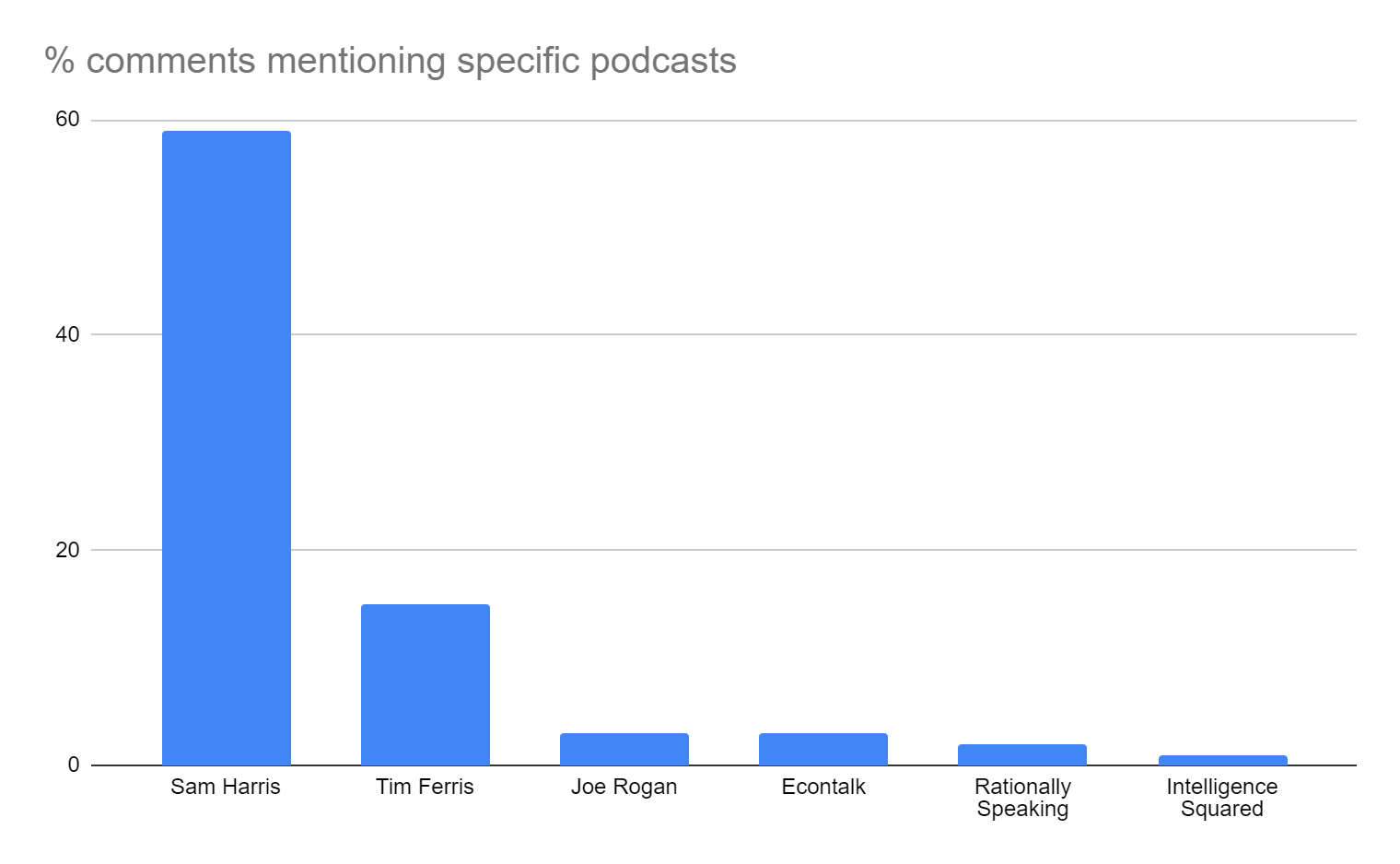

My guess is people who like Sam Harris are disproportionately likely to be potentially interested in EA.

This seems quite likely given EA Survey data where, amongst people who indicated they first heard of EA from a Podcast and indicated which podcast, Sam Harris' strongly dominated all other podcasts.

More speculatively, we might try to compare these numbers to people hearing about EA from other categories. For example, by any measure, the number of people in the EA Survey who first heard about EA from Sam Harris' podcast specifically is several times the number who heard about EA from Vox's Future Perfect. As a lower bound, 4x more people specifically mentioned Sam Harris in their comment than selected Future Perfect, but this is probably dramatically undercounting Harris, since not everyone who selected Podcast wrote a comment that could be identified with a specific podcast. Unfortunately, I don't know the relative audience size of Future Perfect posts vs Sam Harris' EA podcasts specifically, but that could be used to give a rough sense of how well the different audiences respond.

Feels like there should be a "comment anonymously" feature. Would save everyone having to manage all these logins.

We have thought about that. Probably the main reason we haven't done this is the following, which I'll quote from an internal Slack message of mine:

Currently if someone makes an anon account, they use an anonymous email address. There's usually no way for us, or, by extension, someone who had full access to our database, to deanonymize them. However, if we were to add this feature, it would tie the anonymous comments to a primary account. Anyone who found a vulnerability in that part of the code, or got an RCE on us, would be able to post a dump that would fully deanonymize all of those accounts.

I continue to think that a community this large needs mediation functions to avoid lots of harm with each subsequent scandal.

People asked for more details, so I wrote the below.

Let's look at some recent scandals and I'll try and point out some different groups that existed.

- FTX - longtermists and non-longtermists, those with greater risk tolerance and those with less

- Bostrom - rationalists and progressives

- Owen Cotton-Barratt - looser norms vs more robust, weird vs normie

- Nonlinear - loyalty vs kindness, consent vs duty of care

In each case, the community disagrees on who we should be and what we should be. People write comments to signal that they are good and want good things and shouldn't be attacked. Other people see these and feel scared that they aren't what the community wants.

This is tiring and anxiety inducing for all parties. In all cases here there are well intentioned, hard working people who have given a lot to try and make the world better who are scared they cannot trust their community to support them if push comes to shove. There are people horrified at the behaviour of others, scared that this behaviour will repeat itself, with all the costs attached. I feel this way, and I ...

I'd bid for you to explain more what you mean here - but it's your quick take!

Post I spent 4 hours writing on a topic I care deeply about: 30 karma

Post I spent 40 minutes writing on a topic that the community vibes with: 120 karma

I guess this is fine, it's just people being interested, but it can feel weird at times.

Confusion

I get why I and others give to GiveWell rather than catastrophic risk - sometimes it's good to know your "Impact account" is positive even if all the catastrophic risk work was useless.

But why do people not give to animal welfare in this case? Seems higher impact?

And if it's just that we prefer humans to animals that seems like something we should be clear to ourselves about.

Also I don't know if I like my mental model of an "impact account". Seems like my giving has maybe once again become about me rather than impact.

ht @Aaron Bergman for surfacing this

Dear reader,

You are an EA, if you want to be. Reading this forum is enough. Giving a little of your salary effectively is enough. Trying to get an impactful job is enough. If you are trying even with a fraction of your resources to make the world better and chatting with other EAs about it, you are one too.

I talked to someone outside EA the other day who said that in a competitive tender they wouldn't apply to EA funders because they thought it would likely go to someone with connections to OpenPhil.

Seems bad.

A previous partner and I did a sex and consent course together online. I think it's helped me be kinder in relationships.

Useful in general.

More useful if, you:

- have sex casually

- see harm in your relationships and want to grow

- are poly

As I've said elsewhere, I think a very small proportion of people in EA are responsible for most of the relationship harms. Some are bad actors, who need to be removed; some are malefactors, who either have lots of interactions or engage in high-risk behaviours and accidentally cause harm. I would guess I have more traits of the second category than almost all of you. So people like me should do the most work to change.

So most of you probably don't need this, but if you are in some of the above groups, I'd recommend a course like this. Save yourself the heartache of upsetting people you care about.

Happy to DM.

Does anyone understand the bottlenecks to a rapid malaria vaccine rollout? Feels underrated.

Best sense of what's going on (my info's second-hand) is it would cost ~$600M to buy and distribute all of Serum Institute's supply (>120M doses at $3.90/dose, plus ~$1/dose distribution cost) and GAVI doesn't have any new money to do so. So they're possibly resistant to moving quickly, which may be slowing down the WHO prequalification process, which is a gating item for the vaccine being put in vials and purchased by GAVI (via UNICEF). Natural solution for funding is for Gates to lead an effort to do so, but they are heavy supporters of the RTS,S malaria vaccine, so it's awkward for them to put major support into the new R21 vaccine which can be produced in large quantity. Also the person most associated with R21 is Adrian Hill, who is not well-liked in the malaria field. There will also be major logistical hurdles to getting it distributed in the countries, and there are a number of bureaucracies internally in each of the countries that will all need to cooperate.

Here's an op-ed by my colleague Zach: https://foreignpolicy.com/2023/12/08/new-malaria-vaccine-africa-world-health-organization-child-mortality/

Here's one from Peter Singer: https://www.project-syndicate.org/commentary/new...

Relative Value Widget

It gives you sets of donations and you choose which you prefer. If you want, you can add more at the bottom.

https://allourideas.org/manifund-relative-value

so far:

Several journalists (including those we were happy to have write pieces about WWOTF) have contacted me, but I think that if I talk to them, even carefully, my EA friends will be upset with me. And to be honest, that upsets me.

We are in the middle of a mess of our own making. We deserve scrutiny. Ugh, I feel dirty and ashamed and frustrated.

To be clear, I think it should be your own decision whether to talk to journalists, but I do also think it's just better for us to tell our own story on the EA Forum and write comments, rather than give a bunch of journalists the ability to greatly distort the things we tell them in a call, with a platform and microphone that gives us no opportunity to object or correct things.

I have been almost universally appalled at the degree to which journalists straightforwardly lie in interviews, take quotes massively out of context, or make up random stuff related to what you said, and I do think that if you want to help the world understand what is going on, it's better to write up your own thoughts in your own context instead of giving that job to someone else.

I have heard one anecdote of an EA saying that they would be less likely to hire someone on the basis of their religion, because it would imply they were worse at their job (less intelligent or epistemically rigorous). I don't think they were involved in hiring, but I don't think anyone should hold this view.

Here is why:

- As soon as you are in a hiring situation, you have much more information than priors. Even if it were true that, say, people with ADHD[1] were less rational, the interview process should provide much more information than such a prior. If that's not the case, get a better interview process; don't start being prejudiced!

- People don't mind meritocracy, but they want a fair shake. If I heard that people had a prior that ADHD folks were less likely to be hard working, regardless of my actual performance in job tests, I would be less likely to want to be part of this community. You might lose my contributions. It seems likely to me that we come out ahead by ignoring small differences between groups so people don't have to worry about this. People are very sensitive to this. Let's agree not to defect. We judge on our best guess of your performance, not on appearances.

- I would b

I would be unsurprised if this kind of thinking cut only one way. Is anyone suggesting they wouldn't hire poly people because of the increased drama or men because of the increased likelihood of sexual scandal?

In the wake of the financial crisis it was not uncommon to see suggestions that banks etc. should hire more women to be traders and risk managers because they would be less temperamentally inclined towards excessive risk taking.

I think EAs have a bit of an entitlement problem.

Sometimes we think that since we are good, we can ignore the rules. Seems bad.

As with many statements people make about people in EA, I think you've identified something that is true about humans in general.

I think it applies less to the average person in EA than to the average human. I think people in EA are more morally scrupulous and prone to feeling guilty/insufficiently moral than the average person, and I suspect you would agree with me given other things you've written. (But let me know if that's wrong!)

I find statements of the type "sometimes we are X" to be largely uninformative when "X" is a part of human nature.

Compare "sometimes people in EA are materialistic and want to buy too many nice things for themselves; EA has a materialism problem" — I'm sure there are people in EA like this, and perhaps this condition could be a "problem" for them. But I don't think people would learn very much about EA from the aforementioned statements, because they are also true of almost every group of people.

I might start doing some policy BOTEC (back-of-the-envelope calculation) posts, i.e. where I suggest an idea and try to figure out how valuable it is. I think I'd do this faster with a group to bounce ideas off.

If you'd like to be added to a group chat (probably on WhatsApp) to share policy BOTECs, reply here or DM me.

Being able to agree and disagreevote on posts feels like it might be great. Props to the forum team.

Can we have some people doing AI Safety podcast/news interviews as well as Yud?

I am concerned that he's gonna end up being the figurehead here. I'm pretty sure people are already working on this, but I think it's good to say it anyway.

We aren't a community that says "I guess he deserves it"; we say "who is the best person for the job?". Yudkowsky, while he is an expert, isn't a median voice. His estimates of P(doom) are on the far tail of EA experts here. So if I could pick one person I wouldn't pick him, and frankly I wouldn't pick just one person.

Some other voices I'd like to see on podcasts/ interviews:

- Toby Ord

- Paul Christiano

- Ajeya Cotra

- Amanda Askell

- Will MacAskill

- Joe Carlsmith*

- Katja Grace*

- Matthew Barnett*

- Buck Schlegeris

- Luke Muehlhauser

Again, I'm not saying no one has thought of this (I'm 80% sure they have). But I'd like to be 97% sure, so I'm flagging it.

*I am personally fond of this person so am biased

I notice I am pretty skeptical of much longtermist work and the idea that we can make progress on this stuff just by thinking about it.

I think future people matter, but I will be surprised if, after x-risk reduction work, we can find 10s of billions of dollars of work that isn't busywork and shouldn't be spent attempting to learn how to get eg nations out of poverty.

If you type "#" followed by the title of a post and press enter, it will link that post.

Example:

Examples of Successful Selective Disclosure in the Life Sciences

This is wild

The shifts in forum voting patterns across the EU and US seem worthy of investigation.

I'm not saying there is some conspiracy; it seems pretty obvious that EU and US EAs have different views and that this appears in voting patterns, but it seems like we could have more self-knowledge here.

I sense that it's good to publicly name serial harassers who have been kicked out of the community, even if the accuser doesn't want them to be named. Other people's feelings matter too, and I sense many people would like to know who they are.

I think there is a difference between different outcomes, but if you've been banned from EA events then you are almost certainly someone I don't want to invite to parties etc.

Is EA as a bait and switch a compelling argument for it being bad?

I don't really think so

- There are a wide variety of baits and switches, from what I'd call misleading to some pretty normal activities - is it a bait and switch when churches don't discuss their most controversial beliefs at a "bring your friends" service? What about wearing nice clothes to a first date? [1]

- EA is a big movement composed of different groups[2]. Many describe it differently.

- EA has done so much global health stuff I am not sure it can be described as a bait and switch. eg h

I would appreciate being able to vote on forum articles with both agree/disagree and upvote/downvote.

There are lots of posts I think are false but interesting, or true but boring.

I guess African, Indian and Chinese voices are underrepresented in the AI governance discussion. And in the unlikely case that we die, we all die, and I think it's weird that half the people who would die have no one loyal to them in the discussion.

We want AI that works for everyone, and it seems likely you want people who can represent the billions who currently lack a loyal representative.

I'm actually more concerned about the underrepresentation of certain voices as it applies to potential adverse effects of AGI (or even near-AGI) on society that don't involve all of us dying. In the everyone-dies scenario, I would at least be similarly situated to people from Africa, India, and China in terms of experiencing the exact same bad thing that happens. But there are potential non-fatal outcomes, like locking in current global power structures and values, that affect people from non-Western countries much differently (and more adversely) than they'd affect people like me.

Unbalanced karma is good, actually. It means that the moderators have to do less. I like the takes of the top users more than those of the median user, and I want them to have more, but not total, influence.

Appeals to fairness don't interest me - why should voting be fair?

I have more time for transparency.

EAs, please post your job postings to Twitter

Please post your jobs to Twitter and reply with @effective_jobs. It takes 5 minutes, and the jobs I've posted and then tweeted have gotten 1000s of impressions.

Or just DM me on twitter (@nathanpmyoung) and I'll do it. I think it's a really cheap way of getting EAs to look at your jobs. This applies to impactful roles in and outside EA.

Here is an example of some text:

-tweet 1

Founders Pledge Growth Director

@FoundersPledge are looking for someone to lead their efforts in growing the amount that tech entrepreneurs give to effective charities when they IPO.

Salary: $135 - $150k

Location: San Francisco

https://founders-pledge.jobs.personio.de/job/378212

-tweet 2, in reply

@effective_jobs

-end

I suggest it should be automated but that's for a different post.

I notice we are great at discussing stuff but not great at coming to conclusions.

I wish the forum had a better setting for "I wrote this post and maybe people will find it interesting, but I don't want it on the front page unless they do, because that feels pretentious"

I made a quick (and relatively uncontroversial) poll on how people are feeling about EA. I'll share the results if we get 10+ respondents.

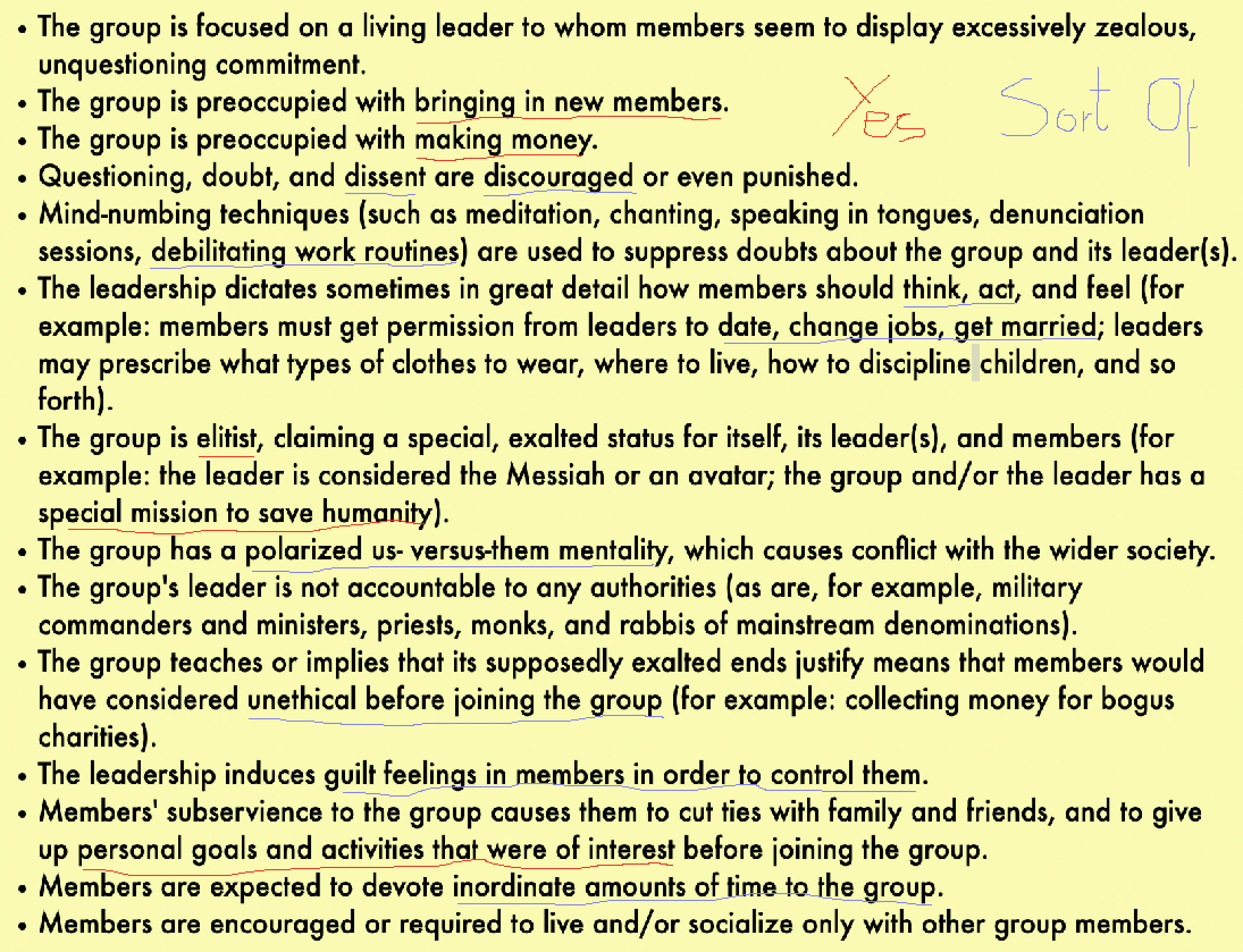

Seems worth considering that

A) EA has a number of characteristics of a "High Demand Group" (cult). This is a red flag and you should wrestle with it yourself.

B) Many of the "Sort of"s are peer pressure. You don't have to do these things. And if you don't want to, don't!

In what sense is it "sort of" true that members need to get permission from leaders to date, change jobs, or marry?

I think that one's a reach, tbh.

(I also think the one about using guilt to control is a stretch.)

My call: EA gets 3.9 out of 14 possible cult points.

The group is focused on a living leader to whom members seem to display excessively zealous, unquestioning commitment.

No

The group is preoccupied with bringing in new members.

Yes (+1)

The group is preoccupied with making money.

Partial (+0.8)

Questioning, doubt, and dissent are discouraged or even punished.

No

Mind-numbing techniques (such as meditation, chanting, speaking in tongues, denunciation sessions, debilitating work routines) are used to suppress doubts about the group and its leader(s).

No

The leadership dictates sometimes in great detail how members should think, act, and feel (for example: members must get permission from leaders to date, change jobs, get married; leaders may prescribe what types of clothes to wear, where to live, how to discipline children, and so forth).

No

The group is elitist, claiming a special, exalted status for itself, its leader(s), and members (for example: the leader is considered the Messiah or an avatar; the group and/or the leader has a special mission to save humanity).

Partial (+0.5)

...

The group has a polarized us-versus-them mentality, which causes conflict with the w...

I don't think it makes sense to say that the group is "preoccupied with making money". I expect that there's been less focus on this in EA than in other groups, although not necessarily due to any virtue, but rather because of how lucky we have been in having access to funding.


GiveDirectly has a President (Rory Stewart) paid $600k, and is hiring a Managing Director. I originally thought they had several other similar roles (because I looked on the website), but I talked to them and seemingly that is not the case. Below is the tweet that tipped me off, but I think it is just mistaken.

One could still take issue with the $600k (though I don't really).

https://twitter.com/carolinefiennes/status/1600067781226950656?s=20&t=wlF4gg_MsdIKX59Qqdvm1w

Seems in line with CEO pay for US nonprofits with budgets over $100M, at least when I spot-check random charities near the end of this list.

I feel confused about the president/CEO distinction however.

I strongly dislike the following sentence on effectivealtruism.org:

"Rather than just doing what feels right, we use evidence and careful analysis to find the very best causes to work on."

It reads to me as arrogant, and epitomises the worst caricatures my friends make of EAs. Read it in a snarky voice (as someone might if they struggled with the movement and were looking to do research): "Rather than just doing what feels right..."

I suggest it gets changed to one of the following:

- "We use evidence and careful analysis to find the very best causes to work on."

- "It's great when anyone does a kind action no matter how small or effective. We have found value in using evidence and careful analysis to find the very best causes to work on."

I am genuinely sure whoever wrote it meant well, so thank you for your hard work.

Are the two bullet points two alternative suggestions? If so, I prefer the first one.

I would like to see posts give you more karma than comments (which would hit me hard). It seems like a highly upvoted post is waaaaay more valuable than 3 upvoted comments on that post, but pretty often the latter gives more karma than the former.

Someone told me they don't bet as a matter of principle, and that as a result EAs/rationalists take their opinions less seriously. Some thoughts:

- I respect individual EAs' preferences. I regularly tell friends to do things they are excited about, to look after themselves, etc. If you don't want to do something but feel you ought to, maybe think about why, but I will support you not doing it. If you have a blanket ban on gambling, fair enough. You are allowed to not do things because you don't want to.

- Gambling is addictive, if you have a problem with it,

I don't bet because I feel it's a slippery slope. I also strongly dislike how opinions and debates in EA are monetised, as this further strengthens the neoliberal vibe EA already has, so my reasons for refraining from betting are stronger in EA than outside it.

Edit: and I too have gotten dismissed by EAs for it in the past.

Being open minded and curious is different from holding that as part of my identity.

Perhaps I never reach it. But it seems to me that "we are open minded people so we probably behave open mindedly" is false.

Or more specifically, I think it's good that EAs want to be open minded, but I'm not sure we are open minded purely because we listen graciously, run criticism contests, and talk about cruxes.

The problem is the problem. And being open minded requires being open to changing one's mind in difficult or set situations. And I don't have a way that's guaranteed to get us over that line.

Clear benefits, diffuse harms

It is worth noting when systems introduce benefits in a few obvious ways but many small harms. An example is blocking housing. It benefits the neighbours a lot - they don't have to have construction nearby - and the people who are harmed are just random marginal people who could have afforded a home but just can't.

But these harms are real and should be tallied.

Much recent discussion in EA has suggested common sense risk reduction strategies which would stop clear bad behavior. Often we all agree on the clear bad behaviour...

I've been musing about some critiques of EA and one I like is "what's the biggest thing that we are missing"

In general, I don't think we are missing things (lol) but here are my top picks:

- It seems possible that we reach out to sciency tech people because they are most similar to us. While this may genuinely be the cheapest way to get people now, there may be costs to the community in terms of diversity of thought (sci/tech people are more similar to one another than the general population is)

- I'm glad to see more outreach to people in developing nations

- It seems obvious t

I think if I knew that I could trade "we all obey some slightly restrictive set of romance norms" for "EA becomes 50% women in the next 5 years" then that's a trade I would advise we take.

That's a big if. But seems trivially like the right thing to do - women do useful work and we should want more of them involved.

To say the unpopular reverse statement, if I knew that such a set of norms wouldn't improve wellbeing in some average of women in EA and EA as a whole then I wouldn't take the trade.

Seems worth acknowledging there are right answers here, if only we knew the outcomes of our decisions.

In defence of Will MacAskill and Nick Beckstead staying on the board of EVF

While I've publicly said that on priors they should be removed unless we hear arguments otherwise, I was kind of expecting someone to make those arguments. If no one will, I will.

MacAskill

MacAskill is very clever, personally kind, and a superlative networker and communicator. Imo he oversold SBF, but I guess I'd have done much worse in his place. It seems to me that we should want people who have made mistakes and learned from them. Seems many EA orgs would be glad to have someone like...

I suggest there is waaaay too much to be on top of in EA, and no one knows who is checking what, so some stuff goes unchecked. If there were a narrower set of "core things we study", it seems more likely that those things would have been gone over by someone in detail, and hence there would be fewer errors in core facts.

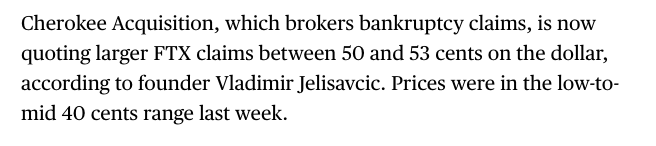

It has an emotional impact on me to note that FTX claims are now trading at 50%. This means that, in expectation, people are gonna get about half of what their assets would have been worth had they held them until now.

I don't really understand whether it should change the way we understand the situation, but I think a lot of people's life savings were wrapped up here and half is a lot better than nothing.

I am not confident on the re...

Feels like there should be some kind of community discussion and research in the wake of FTX, especially if no leadership is gonna do it. But I don't know how that discussion would have legitimacy. I'm okay at such things, but honestly tend to fuck them up somehow. Any ideas?

If I were king

- Use the ideas from all the various posts

- Have a big google doc where anyone can add research and also put a comment for each idea and allow people to discuss

- Then publish another post where we hold a final vote on what should happen

- Then EA orgs can at least see what some kind of community consensus thinks

- And we can see what each other think

I wrote a post on possible next steps but it got little engagement -- unclear if it was a bad post or people just needed a break from the topic. On mobile, so not linking it -- but it's my only post besides shortform.

The problem as I see it is that the bulk of proposals are significantly underdeveloped, risking both applause light support and failure to update from those with skeptical priors. They are far too thin to expect leaders already dealing with the biggest legal, reputational, and fiscal crisis in EA history to do the early development work.

Thus, I wouldn't credit a vote at this point as reflecting much more than a desire for a more detailed proposal. The problem is that it's not reasonable to expect people to write more fleshed-out proposals for free without reason to believe the powers-that-be will adopt them.

I suggested paying people to write up a set of proposals and then voting on those. But that requires both funding and a way to winnow the proposals and select authors. I suggested modified quadratic funding as a theoretical ideal, but a jury of pro-reform posters as a more practical alternative. I thought that problem was manageable, but it is a problem. In particular, at the proposal-development stage, I didn't want tactical voting by reform skeptics.
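For readers unfamiliar with it, the textbook quadratic funding rule funds a project at the square of the sum of the square roots of its individual contributions, with a matching pool covering the gap above the raw contributions. This is a minimal sketch of that standard rule only, not the modified version suggested above, and it assumes an unlimited matching pool (no scaling down when several projects compete for a capped pool).

```python
import math


def quadratic_funding(contributions: list[float]) -> tuple[float, float]:
    """Return (total funding, matching subsidy) for one project under the
    textbook quadratic funding rule: total = (sum of sqrt(c_i))^2.
    Assumes an unlimited matching pool (no scaling across projects)."""
    if any(c < 0 for c in contributions):
        raise ValueError("contributions must be non-negative")
    total = sum(math.sqrt(c) for c in contributions) ** 2
    match = total - sum(contributions)
    return total, match


# Many small backers attract more matching than one backer giving the same total.
print(quadratic_funding([1, 1, 1, 1]))  # (16.0, 12.0)
print(quadratic_funding([4]))           # (4.0, 0.0)
```

The design choice the rule encodes is that breadth of support counts for more than the size of any single backer, which is why it comes up as an idealised way to aggregate community preferences.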

Let's assume that the TIME article is right about the amount of sexual harassment in EA. How big a problem is this relative to other problems? If we spend $10mn on EAGs (a guess), how much should we spend if we could halve sexual harassment in the community?

Nuclear risk is in the news. I hope:

- if you are an expert on nuclear risk, you are shopping around for interviews and comment

- if you are an EA org that talks about nuclear risk, you are going to publish at least one article on how the current crisis relates to nuclear risk or find an article that you like and share it

- if you are an EA aligned journalist, you are looking to write an article on nuclear risk and concrete actions we can take to reduce it

I'll publicly flag that I sort of break the karma system. The way I like to post comments is little and often, and this is just overpowered for getting karma.

eg I recently overtook Julia Wise and I've been on the forum for years less than anyone else.

I don't really know how to solve this - maybe someone should just 1 time nuke my karma? But yeah it's true.

Note that I don't do this deliberately; it's just how I like to post, and I honestly think it's better to split up ideas into separate comments. But boy is it good at getting karma. And soooo m...

To modify a joke I quite liked:

Having EA Forum karma tells you two things about a person:

- They had the potential to have had a high impact in EA-relevant ways

- They chose not to.

I wouldn't worry too much about the karma system. If you're worried about having undue power in the discourse, one thing I've internalized is to use the strong upvote/downvote buttons very sparingly (e.g. I only strong-upvoted one post in 2022 and I think I never strong-downvoted any post, other than obvious spam).

A friend asked about effective places to give. He wanted to donate through his payroll in the UK. He was enthusiastic about it, but that process was not easy.

- It wasn't particularly clear whether GiveWell or EA Development Fund was better and each seemed to direct to the other in a way that felt at times sketchy.

- It wasn't clear if payroll giving was an option

- He found it hard to find GiveWell's spreadsheet of effectiveness

Feels like making donations easy should be a core concern of both GiveWell and EA Funds and my experience made me a little embarrassed to be honest.

I am not particularly excited to discuss Nonlinear in any way, but I note I'd prefer to discuss it on LessWrong rather than here.

Why is this?

- I dunno

- Feels like it's gonna be awful discourse

- The thing I actually want to do is go over things point by point but I feel here it's gonna get all fraught and statusy

Why I like the donation election

I have some things I do like and then some clarifications.

I like that we are trying new mechanisms. If we are going to be a community that lasts, we need to build ways of working that don't have the failure modes others have had in the past. I'm not particularly optimistic about this specific donation election, but I like that we are doing it. For this reason I've donated a little and voted.

I don't think this specific donation election mechanism adds a lot. Money already gets allocated on a kind of voting ...

Factional infighting

[epistemic status - low, probably some elements are wrong]

tl;dr

- communities have a range of dispute resolution mechanisms, from voting to public conflict to some kind of civil war

- some of these are much better than others

- EA has disputes and resources and it seems likely that there will be a high profile conflict at some point

- What mechanisms could we put in place to handle that conflict constructively and in a positive sum way?

When a community grows as powerful as EA is, there can be disagreements about resource allocation. ...

I dislike the framing of "considerable" and "high engagement" on the EA survey.

This is copied from the survey:

- No engagement: I’ve heard of effective altruism, but do not engage with effective altruism content or ideas at all

- Mild engagement: I’ve engaged with a few articles, videos, podcasts, discussions, events on effective altruism (e.g. reading Doing Good Better or spending ~5 hours on the website of 80,000 Hours)

- Moderate engagement: I’ve engaged with multiple articles, videos, podcasts, discussions, or events on effective altruism (e.g. subscribing to the

It is frustrating that I cannot reply to comments from the notification menu. Seems like a natural thing to be able to do.

Does EA have a clearly denoted place for exit interviews? Like if someone who was previously very involved was leaving, is there a place they could say why?

We are good at discussion but bad at finding the new thing to update to.

Look at the recent Happier Lives Institute discussion: https://forum.effectivealtruism.org/posts/g4QWGj3JFLiKRyxZe/the-happier-lives-institute-is-funding-constrained-and-needs

Lots of discussion, a reasonable amount of new information, but what should our final update be:

- Have HLI acted fine or badly?

- Is there a pattern of misquoting and bad scholarship?

- Have global health orgs in general moved towards Self-reported WellBeing (SWB) as a way to measure interventions?

- Has HLI generally done g

It is just really hard to write comments that challenge without seeming to attack people. Anyone got any tips?

EA short story competition?

Has anyone ever run a competition for EA related short stories?

Why would this be a good idea?

* Narratives resonate with people and have been used to convey ideas for 1000s of years

* It would be low cost and fun

* With voting on this forum, there is the same risk of "bad posts" as for any other post

How could it work?

* Stories submitted under a tag on the EA forum.

* Rated by upvotes

* Max 5000 words (I made this up, dispute it in the comments)

* If someone wants to give a reward, then there could be a prize for the highest rated

* If there is a lot of interest/quality they could be collated and even published

* Since it would be measured by upvotes it seems unlikely a destructive story would be highly rated (or as likely as any other destructive post on the forum)

Upvote if you think it's a good idea. If it gets more than 40 karma I'll write one.

EA infrastructure idea: Best Public Forecaster Award

- Gather all public forecasting track records

- Present them in an easily navigable form

- Award prizes, such as one for the best Brier score on forecasts resolving in the last year
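The Brier score is the mean squared difference between a probability forecast and the 0/1 outcome, so lower is better and 0 is perfect. This is a minimal sketch of scoring and ranking forecasters, assuming each forecaster's resolved forecasts are available as (probability, outcome) pairs; the forecaster names and numbers are hypothetical, for illustration only.

```python
def brier_score(forecasts: list[tuple[float, int]]) -> float:
    """Mean of (p - outcome)^2 over resolved binary forecasts; 0 is perfect."""
    if not forecasts:
        raise ValueError("need at least one resolved forecast")
    return sum((p - o) ** 2 for p, o in forecasts) / len(forecasts)


def rank_forecasters(records: dict[str, list[tuple[float, int]]]) -> list[tuple[str, float]]:
    """Sort forecasters by Brier score, best (lowest) first."""
    scores = {name: brier_score(fs) for name, fs in records.items()}
    return sorted(scores.items(), key=lambda item: item[1])


# Hypothetical track records: (forecast probability, outcome 1=happened / 0=didn't).
records = {
    "forecaster_a": [(0.9, 1), (0.2, 0), (0.7, 1)],
    "forecaster_b": [(0.6, 1), (0.5, 0), (0.4, 1)],
}
for name, score in rank_forecasters(records):
    print(f"{name}: {score:.3f}")
```

A raw Brier score rewards forecasters who only answer easy questions, so an actual award would probably need a minimum number of forecasts or some comparison on shared questions; the sketch leaves that open.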

If this gets more than 20 karma, I'll write a full post on it. This is rough.

Questions that come to mind

Where would we find these forecasts?

To begin with I would look at those with public records:

- Scott Alexander

- Bryan Caplan

- Matthew Yglesias

- Many such cases

Beyond these, one could build a community around finding forecasts of public fi...

There is no EA "scene" on twitter.

For good or ill, while there are posters on twitter who talk about EA, there isn't a "scene" (a space where people use loads of EA jargon and assume everyone is EA) or at least not that I've seen.

This surprised me.

The amount of content on the forum is pretty overwhelming at the moment and I wonder if there is a better way to sort it.

I think the EA forum wiki should allow longer and more informative articles. I think that it would get 5x traffic. So I've created a market to bet.

Some thoughts on: https://twitter.com/FreshMangoLassi/status/1628825657261146121?s=20

I agree that it’s worth saying something about sexual behaviour. Here are my broad thoughts:

- I am sad about women having bad experiences, I think about it a lot

- I want to be accurate in communication

- I think it's easy to reduce harms a lot without reducing benefits

Firstly, I’m sad about the current situation. Seems like too many women in EA have bad experiences. There is a discussion about what happens in other communities or tradeoffs. But first it’s really sad.

More th...

Question answers

When answering questions, I recommend people put each separate point as a separate answer. The karma ranking system is useful to see what people like/don't like and having a whole load of answers together muddies the water.

It was pointed out to me that I probably vote a bit wrong on posts.

I generally just upvote and downvote according to how I feel, but occasionally, if I think a post is very overrated or underrated, I will strong upvote or downvote even though I feel less strongly than that.

But this is I think the wrong behaviour and a defection. Since if we all did that then we'd all be manipulating the post to where we think it ought to be and we'd lose the information held in the median of where all our votes leave it.

Sorry.

What is a big, open, factual, non-community question in EA? I have a cool discussion tool I want to try out.

Should we want openai to turn off bing for a bit? We should, right? Should we create memes to that effect?

This could have been a wiki

I hold that there could have been a well-maintained wiki article on top EA orgs, and then people could anonymously have added many Nonlinear stories a while ago. I would happily have added comments about their move-fast-and-break-things approach, and maybe had a better way to raise it with them.

There would have been edit wars and an earlier investigation.

How much would you pay to have brought this forward by 6 months or a year? And likewise for whatever other startling revelations there are. Whatever that amount is, I suggest a functional wiki is worth 5% - 10% of it, per case.

EA global

1) Why is EA global space constrained? Why not just have a larger venue?

I assume there is a good reason for this which I don't know.

2) It's hard to invite friends to EA global. Is this deliberate?

I have a close friend who finds EA quite compelling. I figured I'd invite them to EA global. They were dissuaded by the fact they had to apply and that it would cost $400.

I know that's not the actual price, but they didn't know that. I reckon they might have turned up for a couple of talks. Now they probably won't apply.

Is there no way that this event could be more welcoming or is that not the point?

Re 1) Is there a strong reason to believe that EA Global is constrained by physical space? My impression is that they try to optimize pretty hard to have a good crowd and for there to be a high density of high-quality connections to be formed there.

Re 2) I don't think EA Global is the best way for newcomers to EA to learn about EA.

EDIT: To be clear, neither 1) nor 2) are necessarily endorsements of the choice to structure EA Global in this way, just an explanation of what I think CEA is optimizing for.

EDIT 2 2021/10/11: This explanation may be wrong, see Amy Labenz's comment here.

Personal anecdote possibly relevant for 2): EA Global 2016 was my first EA event. Before going, I had lukewarm-ish feelings towards EA, due mostly to a combination of negative misconceptions and positive true-conceptions; I decided to go anyway somewhat on a whim, since it was right next to my hometown, and I noticed that Robin Hanson and Ed Boyden were speaking there (and I liked their academic work). The event was a huge positive update for me towards the movement, and I quickly became involved – and now I do direct EA work.

I'm not sure that a different introduction would have led to a similar outcome. The conversations and talks at EAG are just (as a general rule) much better than at local events, and reading books or online material also doesn't strike me as naturally leading to being part of a community in the same way.

It's possible my situation doesn't generalize to others (perhaps I'm unusual in some way, or perhaps 2021 is different from 2016 in a crucial way such that the "EAG-first" strategy used to make sense but doesn't anymore), and there may be other costs to having more newcomers at EAG (e.g. diluting the population of people more familiar with EA concepts), but I also think it's possible my situation does generalize and that we'd be better off nudging more newcomers to come to EAG.

Hi Nathan,

Thank you for bringing this up!

1) We’d like to have a larger capacity at EA Global, and we’ve been trying to increase the number of people who can attend. Unfortunately, this year it’s been particularly difficult; we had to roll over our contract with the venue from 2020 and we are unable to use the full capacity of the venue to reduce the risk from COVID. We’re really excited that we just managed to add 300 spots (increasing capacity to 800 people), and we’re hoping to have more capacity in 2022.

There will also be an opportunity for people around the world to participate in the event online. Virtual attendees will be able to enjoy live streamed content as well as networking opportunities with other virtual attendees. More details will be published on the EA Global website the week of October 11.

2) We try to have different events that are welcoming to people who are at different points in their EA engagement. For someone earlier in their exploration of EA, the EAGx conferences are going to be a better fit. From the EA Global website:

Effective altruism conferences are a good fit for anyone who is putting EA principles into action through their...

- It is unclear to me that, if we chose cause areas again, we would choose global development

- The lack of a focus on global development would make me sad

- This issue should probably be investigated and mediated to avoid a huge community breakdown; it is naïve to think that we can just swan through this without careful and kind discussion

I think the wiki should be about summarising and synthesising articles on this forum.

- There are lots of great articles which will be rarely reread

- Many could do with more links to each other and to other key pieces

- Many could be better edited, combined, etc.

- The wiki could take all content and aim to turn it into a minimal viable form of itself

A friend in Canada wants to give 10k to a UK global health charity but wants it to be tax-neutral. Does anyone who gives to a big charity want to swap? He would give to your charity in Canada (and get the tax back), and you would give to this global health one.

The UK government will pay organisations to hire currently unemployed 18-24-year-olds for 6 months. This covers minimum wage and national insurance.

I imagine many EA orgs are people-constrained rather than funding-constrained, but it might be worth it.

There is also a data science org which will train them: https://twitter.com/John_Sandall/status/1315702046440534017

Note: applications have to be for 30 jobs, but you can apply over a number of organisations or alongside a local authority etc.

https://www.gov.uk/government/collections/kickstart-scheme

EA Book discount codes.

tl;dr EA books have a positive externality. The response should be to subsidise them.

If EA thinks that certain books (Doing Good Better, The Precipice) have greater benefits than they seem, it could subsidise them.

There could be an EA website which has amazon coupons for EA books so that you can get them more cheaply if buying for a friend, or advertise said coupon to your friends to encourage them to buy the book.

From 5 mins of research, the current best way would be for a group to buy EA books and sell them at the list price...

Confidence 60%

By the way, EA leadership has my permission to put scandal on the back burner until we have a strategy on Bing. It feels like a big escalation to have an ML model reading its own past messages and running a search engine.

EA internal issues matter but only if we are alive.

Reasons I would disagree:

(1) Bing is not going to make us 'not alive' on a coming-year time scale. It's (in my view) a useful and large-scale manifestation of problems with LLMs that can certainly be used to push ideas and memes around safety etc, but it's not a direct global threat.

(2) The people best-placed to deal with EA 'scandal' issues are unlikely to perfectly overlap with the people best-placed to deal with the opportunities/challenges Bing poses.

(3) I think it's bad practice for a community to justify backburnering pressing community issues with an external issue, unless the case for the external issue is strong; it's a norm that can easily become self-serving.

EA criticism

[Epistemic Status: low, I think this is probably wrong, but I would like to debug it publicly]

If I have a criticism of EA along Institutional Decision Making lines, it is this:

For a movement that wants to change how decisions get made, we should make those changes in our own organisations first.

Examples of good progress:

- prizes - EA orgs have offered prizes for innovation

- voting systems - it's good that the forum is run on upvotes and that often I think EA uses the right tool for the job in terms of voting

Things I would like to see more...

I think the community health team should make decisions on the balance of harms rather than beyond reasonable doubt. If it seems likely someone did something bad, they can be punished a bit until we don't think they'll do it again. But we have to actually take all the harms into account.

Should I tweet this? I'm very on the margin. Agree/disagree vote (which doesn't change karma).

If I find this forum exhausting to post on sometimes, I can only imagine how many people bounce off entirely.

The forum has a wiki (like Wikipedia).

The "Criticism of EA Community" wiki post is here.

I think it would be better as a summary of criticisms rather than links to documents containing criticisms.

This is a departure from the current wiki style, so after talking to moderators we agreed to draft externally.

Collaborative Draft:

https://docs.google.com/document/d/1RetcAA7D94y6v3qxoKi_Ven-xF98FjirokvI-g8cKI4/edit#

Upvote this post if you think the "Criticism of EA Community" post will be better as a collaboratively-written summary.

Downvote if you ...

I did a podcast where we talked about EA, would be great to hear your criticisms of it. https://pca.st/i0rovrat

Should I do more podcasts?

Any time that you read a wiki page that is sparse or has mistakes, consider adding what you were trying to find. I reckon in a few months we could make the wiki really good to use.

I sense that Conquest's law is true: organisations that are not explicitly right-wing move to the left.

I'm not concerned about moving to the left tbh, but I am concerned about moving away from truth, so it feels like it would be good to constantly pull back towards saying true things.

I am so impressed at the speed with which Sage builds forecasting tools.

Props @Adam Binks and co.

Fatebook: the fastest way to make and track predictions looks great.

I still don't really like the idea of CEA being democratically elected but I like it more than I once did.

Top Forecaster Podcast

I talked to Peter Wildeford, who is a top forecaster and Head of Rethink Priorities, about the US 2024 General Election.

We try to pin down specific probabilities.

Youtube: https://www.youtube.com/watch?v=M7jJxPfOdAo

Spotify: https://open.spotify.com/episode/4xJw9af9SMSmX5N2UZTpRD?si=Dh9RqPwqSDuHj7VpEx_nwg&nd=1

Pocketcasts: https://pca.st/ytt7guj0

With better wiki features and a way to come to consensus on numbers, I reckon this forum could write a career guide good enough to challenge 80k. They do great work, but we are many.

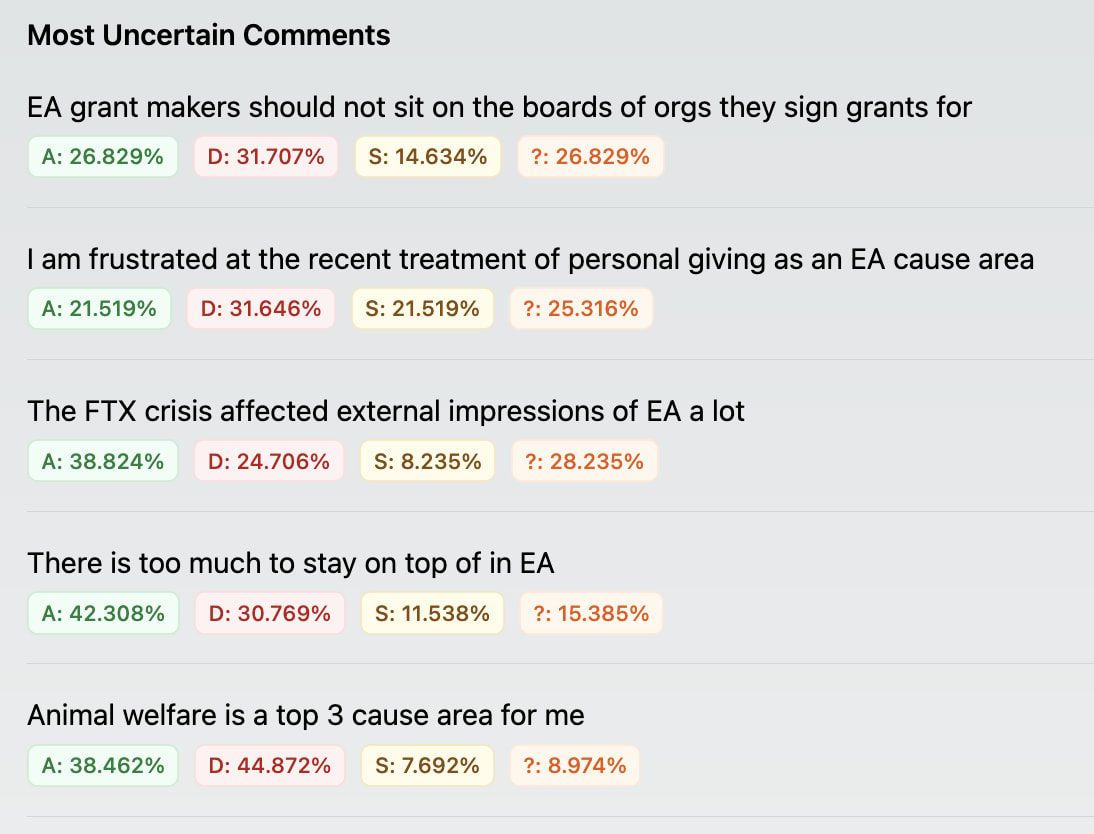

89 people responded to my strategy poll so far.

Here are the areas of biggest uncertainty.

It seems we could try to understand these better.

Poll link: https://viewpoints.xyz/polls/ea-strategy-1

Analytics: https://viewpoints.xyz/polls/ea-strategy-1/analytics

This perception gap site would be a good format for learning and could be applied to altruism. It reframes correcting biases as a fun prediction game.

https://perceptiongap.us/

It's a site which gets you to guess what other political groups (Republicans and Democrats) think about issues.

Why is it good:

1) It gets people thinking and predicting. They are asked a clear question about other groups and have to answer it.

2) It updates views in a non-patronising way - it turns out dems and repubs are much less polarised than most people think (the stat they give i...

Space in my brain.

I was reading this article about nuclear winter a couple of days ago and I struggled. It's a good article, but there isn't an easy slot in my worldview for it. The main thrust was something like "maybe nuclear winter is worse than other people think". But I don't really know how bad other people think it is.

Compare this to community articles: I know how the community functions and I have opinions on things. Each article fits neatly into my brain.

If I had a globe of my worldview, the EA community section would be very well mapped out...

EA Twitter bots

A set of EA jobs Twitter bots, each of which retweets a specific set of hashtags, e.g. #AISafety #EAJob, #AnimalSuffering #EAJob, etc. Please don't get hung up on these; we'd actually need to brainstorm the right hashtags.

You follow the bots and hear about the jobs.
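A minimal sketch of the routing rule, assuming each bot retweets a tweet only when the tweet carries every hashtag in that bot's set (matched case-insensitively). The bot names are hypothetical, and the sketch deliberately stops before any Twitter API call; it only decides which bots should act.

```python
import re

# Hypothetical bot configuration: bot name -> hashtags a tweet must ALL carry.
BOTS = {
    "ai_safety_jobs": {"#aisafety", "#eajob"},
    "animal_jobs": {"#animalsuffering", "#eajob"},
}

HASHTAG = re.compile(r"#\w+")


def hashtags(text):
    """Extract hashtags from tweet text, lowercased for case-insensitive matching."""
    return {tag.lower() for tag in HASHTAG.findall(text)}


def bots_to_retweet(text, bots=BOTS):
    """Return (sorted) the bots whose required hashtags are a subset of the tweet's."""
    tags = hashtags(text)
    return sorted(name for name, required in bots.items() if required <= tags)


print(bots_to_retweet("We're hiring a researcher! #AISafety #EAJob"))
```

Requiring the shared #EAJob tag alongside a cause tag keeps general cause-area chatter out of the job feeds; a job tagged with both cause hashtags would be picked up by both bots.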

I wouldn't recommend people tweet about the Nonlinear stuff a lot.

There is an appropriate level of publicity for things, and right now I think the forum is the right level for this. It seems like there is room for people to walk back and apologise. If it's posted more widely, I'm not sure there will be.

If you think that appropriate actions haven't been taken in, say, a couple of months, then I get tweeting a bit more.

I imagine it has cost 80k, and still does, to push for AI safety stuff, both back when it was weird and now that it seems mainstream.

Like, I think an interesting metric is when people say something which shifts some kind of group vibe. And sure, catastrophic risk folks are into it, but many EAs aren't and would have liked a more holistic approach (I guess).

So it seems like a notable tradeoff.

I think the forum should have something like a retweet function, but as the equivalent of GitHub forks. You could make changes to someone's post and offer them the ability to incorporate them. If they don't, you could just remake the article with the changes and an acknowledgement that you did.

I don't think people would actually do that very often, because they'd get no karma most of the time, but it would provide karma and an attribution trail for:

- summaries

- significant corrections/reframings

- and the author could still accept the edits later

There were too few parties on the last night of EA Global in London, which led to overcrowding, stressed party hosts and a lot of wasted time.

I suggest in future that there should be at least n/200 parties where n is the number of people attending the conference.

I don't think CEA should legislate parties, but I would like to surface in people's minds that if there are fewer than n/200 parties, then you should call up your friend with most amenable housemates and tell them to organise!
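The n/200 rule of thumb is simple arithmetic, but rounding matters: a fractional result has to round up to a whole party. A minimal sketch, assuming the 200-people-per-party figure from the post and using hypothetical attendance numbers:

```python
def min_parties(attendees, people_per_party=200):
    """Smallest whole number of parties with parties >= attendees / people_per_party."""
    if attendees < 0 or people_per_party <= 0:
        raise ValueError("attendees must be >= 0 and people_per_party > 0")
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-attendees // people_per_party)


def enough_parties(attendees, planned):
    """True if the planned number of parties meets the n/200 rule of thumb."""
    return planned >= min_parties(attendees)


print(min_parties(1500))  # 1500 / 200 = 7.5, rounded up
```

For a hypothetical 1,500-person conference the rule gives 7.5, so eight parties, not seven.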

Does anyone know people working on reforming the academic publishing process?

Coronavirus has caused journalists to look for scientific sources. There are no journal articles because of the lag time. So they have gone to preprint servers like bioRxiv (pronounced bio-archive). These servers are not peer reviewed so some articles are of low quality. So people have gone to twitter asking for experts to review the papers.

https://twitter.com/ryneches/status/1223439143503482880?s=19

This is effectively a new academic publishing paradigm. If there were support fo...

Rather than using Facebook to collect EA jobs, we should use an Airtable form.

1) Individuals finding jobs could put all the details in, saving time for whoever would otherwise have to do this at 80k.

2) Airtable can post directly to Facebook, so everyone would still see it https://community.airtable.com/t/posting-to-social-media-automatically/20987

3) Some people would find it quicker. Personally, I'd prefer an Airtable form to inputting jobs to Facebook manually every time.

Ideally we should find websites which often publish useful jobs and then scrape them regularly.
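Regular scraping raises one practical issue: the same job reappears on every run and must not be reposted. The sketch below shows one way to handle it, assuming a job is identified by organisation, title and URL, normalised for case and a trailing slash. The `JobPosting` record and `new_postings` helper are hypothetical. No fetching or Airtable calls are shown, and the sketch only filters already-scraped records.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class JobPosting:
    """Hypothetical record for one scraped or form-submitted job."""
    organisation: str
    title: str
    url: str

    def key(self) -> tuple[str, str, str]:
        # Normalise so trivial differences (case, spacing, trailing slash) still match.
        return (
            self.organisation.strip().lower(),
            self.title.strip().lower(),
            self.url.strip().rstrip("/"),
        )


def new_postings(scraped: list[JobPosting], seen_keys: set) -> list[JobPosting]:
    """Return jobs not seen before, recording their keys in seen_keys as a side effect."""
    fresh = []
    for job in scraped:
        k = job.key()
        if k not in seen_keys:
            seen_keys.add(k)
            fresh.append(job)
    return fresh
```

Persisting `seen_keys` between runs (in a file or an Airtable table, for example) is what prevents reposts across scrapes; within a single run it also collapses near-duplicate listings.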

My very quick improving institutional decision-making (IIDM) thoughts

Epistemic status: Weak 55% confidence. I may delete. Feel free to call me out or DM me etc etc.