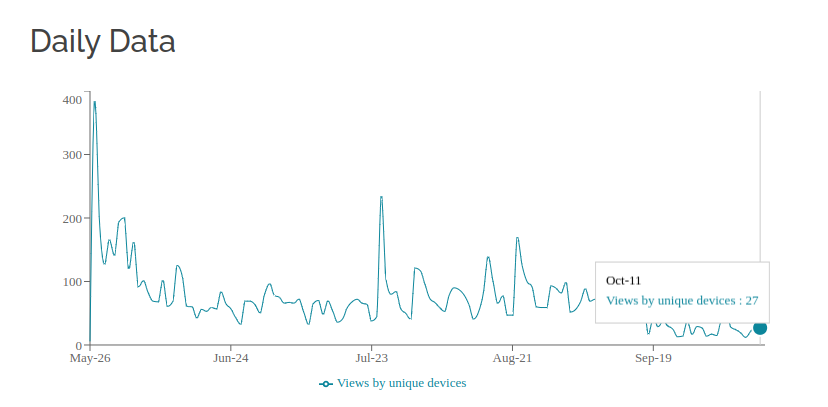

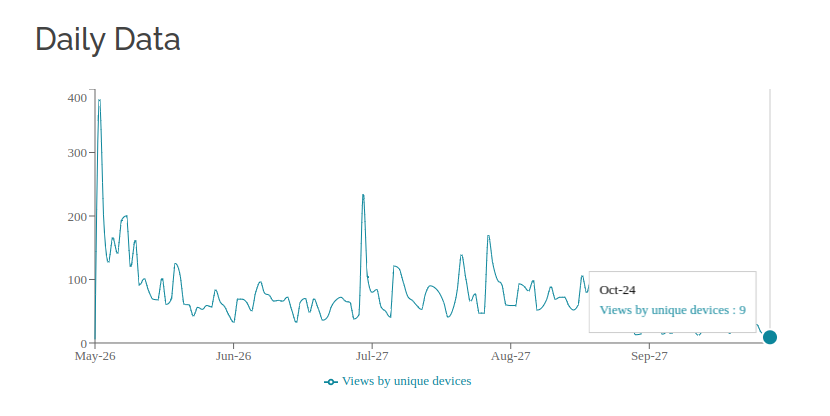

Update: Since September has passed, this thread is now off the frontpage, and few people are looking at it.

It might be a good idea to also make a frontpage post with the "Job listing (open)" topic.

We’d like to help applicants and hiring managers coordinate, so we’ve set up two hiring threads.

So: consider sharing your information if you are hiring for positions in EA!

Please only post if you personally are part of the hiring company—no recruiting firms or job boards. Only one post per company, per month.

If it isn't a household name, please explain what your company does.[1]

See the latest similar thread on Hacker News for example postings.

This was inspired by the discussions around an EA common application, especially this comment. We thought it might be useful to replicate the "Who wants to be hired?" and "Who's hiring?" threads from the Hacker News forum.

Job boards / resources

- 80,000 Hours job board (spreadsheet version)

- Open job listings on this forum

- EA work club

- EA internships board

- Impact Colabs for volunteer and collaboration opportunities (full page view)

- EA Training Board

- 80,000 Hours LinkedIn group

- Animal Advocacy Careers job board (spreadsheet version)

- This list of job boards

- EA job postings and EA volunteering Facebook groups

- EA-aligned tech jobs spreadsheet

- Effective Thesis opportunities newsletter

- High Impact Professionals Talent Directory

- Did we forget any? Leave a comment or send us a message and we'll add it here!

This thread is a test

We’re not sure how much it will help, or how we should improve future similar attempts. So if you have any feedback, we’d love to hear it. (Please leave it as a comment or message us.)

See also

The related thread: Who wants to be hired?

[1] Quoted from the Hacker News thread.

The evaluations project at the Alignment Research Center is looking to hire a generalist technical researcher and a webdev-focused engineer. We're a new team at ARC building capability evaluations (and in the future, alignment evaluations) for advanced ML models. The goals of the project are to improve our understanding of what alignment danger is going to look like, understand how far away we are from dangerous AI, and create metrics that labs can make commitments around (e.g. 'If you hit capability threshold X, don't train a larger model until you've hit alignment threshold Y'). We're also still hiring for model interaction contractors, and we may be taking SERI MATS fellows.